mirror of https://github.com/nocodb/nocodb

20 changed files with 866 additions and 123 deletions

@ -0,0 +1,16 @@

|

||||

--- |

||||

title: "Architecture Overview" |

||||

description: "Simple overview of NocoDB architecture" |

||||

hide_table_of_contents: true |

||||

--- |

||||

|

||||

By default, if `NC_DB` is not specified, SQLite is used to store your metadata. We recommend keeping metadata and user data in separate databases. |

||||

|

||||

|

||||

|

||||

|

||||

| Project Type | Metadata stored in | Data stored in | |

||||

|---------|-----------|--------| |

||||

| Create new project | NC_DB | NC_DB | |

||||

| Create new project with External Database | NC_DB | External Database | |

||||

| Create new project from Excel | NC_DB | NC_DB | |

||||
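The table above can be read as a small decision rule. The following is a minimal sketch of that rule, not NocoDB's implementation: `ProjectType`, `storageFor`, and `metadataBackend` are hypothetical names introduced only for illustration.

```typescript
// Hypothetical names; this mirrors the table, not NocoDB's actual code.
type ProjectType = "new" | "external-db" | "excel";

interface StorageLocation {
  metadata: "NC_DB";
  data: "NC_DB" | "External Database";
}

// Metadata always lives in NC_DB; only external-database projects keep
// their data elsewhere.
function storageFor(type: ProjectType): StorageLocation {
  return {
    metadata: "NC_DB",
    data: type === "external-db" ? "External Database" : "NC_DB",
  };
}

// `ncDb` stands in for the NC_DB setting. When it is missing or empty,
// metadata falls back to SQLite.
function metadataBackend(ncDb: string | undefined): string {
  return ncDb === undefined || ncDb === "" ? "sqlite (default)" : "configured via NC_DB";
}
```

Keeping the two questions separate (where data goes, and which engine backs `NC_DB`) matches the recommendation to keep metadata and user data apart.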

@ -0,0 +1,23 @@

|

||||

--- |

||||

title: "Repository structure" |

||||

description: "Repository Structure" |

||||

hide_table_of_contents: true |

||||

--- |

||||

|

||||

We use ``Lerna`` to manage multiple packages. The repository contains the following [packages](https://github.com/nocodb/nocodb/tree/master/packages). |

||||

|

||||

- ``packages/nc-cli``: A CLI to create a NocoDB app. |

||||

|

||||

- ``packages/nocodb-sdk``: API client SDK for NocoDB. |

||||

|

||||

- ``packages/nc-gui``: NocoDB Frontend. |

||||

|

||||

- ``packages/nc-lib-gui``: The build version of ``nc-gui`` which will be used in ``packages/nocodb``. |

||||

|

||||

- ``packages/nc-plugin``: Plugin template. |

||||

|

||||

- ``packages/noco-blog``: NocoDB Blog which will be auto-released to [nocodb/noco-blog](https://github.com/nocodb/noco-blog). |

||||

|

||||

- ``packages/noco-docs``: NocoDB Documentation which will be auto-released to [nocodb/noco-docs](https://github.com/nocodb/noco-docs). |

||||

|

||||

- ``packages/nocodb``: NocoDB Backend, hosted in [NPM](https://www.npmjs.com/package/nocodb). |

||||

@ -0,0 +1,50 @@

|

||||

--- |

||||

title: "Development Setup" |

||||

description: "How to set-up your development environment" |

||||

--- |

||||

|

||||

## Clone the repo |

||||

|

||||

```bash |

||||

git clone https://github.com/nocodb/nocodb |

||||

# change directory to the project root |

||||

cd nocodb |

||||

``` |

||||

|

||||

## Install dependencies |

||||

|

||||

```bash |

||||

# run from the project root |

||||

pnpm i |

||||

``` |

||||

|

||||

## Start Frontend |

||||

|

||||

```bash |

||||

# run from the project root |

||||

pnpm start:frontend |

||||

# runs on port 3000 |

||||

``` |

||||

|

||||

## Start Backend |

||||

|

||||

```bash |

||||

# run from the project root |

||||

pnpm start:backend |

||||

# runs on port 8080 |

||||

``` |

||||

|

||||

Any changes made to the frontend or backend are automatically reflected in the browser. |

||||

|

||||

## Enabling CI-CD for Draft PR |

||||

|

||||

CI-CD is triggered when a PR moves from draft state to `Ready for review` and when changes are pushed to `Develop`. To verify CI-CD before requesting a review, add the label `trigger-CI` to the draft PR. |

||||
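The trigger rules above can be summarised as a predicate. This is an illustrative sketch only: the `CiEvent` shape and `shouldRunCi` are assumptions made for this example and do not reflect the actual workflow configuration.

```typescript
// Hypothetical event model; the real rules live in the CI workflow files.
type CiEvent =
  | { kind: "ready_for_review" }
  | { kind: "push"; branch: string }
  | { kind: "labeled"; isDraft: boolean; label: string };

function shouldRunCi(event: CiEvent): boolean {
  switch (event.kind) {
    case "ready_for_review":
      // The PR moved out of draft state.
      return true;
    case "push":
      // Changes pushed to the develop branch.
      return event.branch === "develop";
    case "labeled":
      // A draft PR opts in explicitly with the `trigger-CI` label.
      return event.isDraft && event.label === "trigger-CI";
  }
}
```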

|

||||

## Accessing CI-CD Failure Screenshots |

||||

|

||||

For Playwright tests, screenshots are captured during the test run. They provide vital clues for debugging issues observed in CI-CD. To access the screenshots, open the link associated with the CI-CD run and click `Artifacts`. |

||||

|

||||

|

||||

|

||||

|

||||

|

||||

@ -0,0 +1,189 @@

|

||||

--- |

||||

title: "Writing Unit Tests" |

||||

description: "Overview to Unit Testing" |

||||

--- |

||||

|

||||

## Unit Tests |

||||

|

||||

- All individual unit tests are independent of each other. We don't use any shared state between tests. |

||||

- The test environment includes the `sakila` sample database; any change a test makes to it is reverted before other tests run. |

||||

- When unit tests run, they try to connect to a MySQL server on `localhost:3306` with username `root` and password `password` (all configurable). If no server is found, they fall back to SQLite, so no SQL server is required to run the tests. |

||||
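The MySQL-then-SQLite fallback described above can be sketched as follows. The names (`MysqlConfig`, `TestDb`, `chooseTestDb`) and the injected `canConnect` probe are assumptions for illustration; the actual logic in the test helpers may differ.

```typescript
// Sketch only: the type and function names here are hypothetical.
interface MysqlConfig {
  host: string;
  port: number;
  user: string;
  password: string;
}

type TestDb =
  | { client: "mysql"; connection: MysqlConfig }
  | { client: "sqlite" };

// Defaults taken from the description above; each can be overridden.
const defaultMysql: MysqlConfig = {
  host: "localhost",
  port: 3306,
  user: "root",
  password: "password",
};

// `canConnect` is an injected probe so the decision logic stays testable
// without a running server.
function chooseTestDb(
  canConnect: (config: MysqlConfig) => boolean,
  overrides: Partial<MysqlConfig> = {},
): TestDb {
  const connection: MysqlConfig = { ...defaultMysql, ...overrides };
  return canConnect(connection)
    ? { client: "mysql", connection }
    : { client: "sqlite" };
}
```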

|

||||

### Pre-requisites |

||||

|

||||

- MySQL is preferred; however, tests can fall back to SQLite. |

||||

|

||||

### Setup |

||||

|

||||

```bash |

||||

pnpm --filter=-nocodb install |

||||

|

||||

# add a .env file |

||||

cp tests/unit/.env.sample tests/unit/.env |

||||

|

||||

# open .env file |

||||

open tests/unit/.env |

||||

``` |

||||

|

||||

Configure the following variables |

||||

> DB_HOST : host |

||||

> DB_PORT : port |

||||

> DB_USER : username |

||||

> DB_PASSWORD : password |

||||

|

||||

### Run Tests |

||||

|

||||

``` bash |

||||

pnpm run test:unit |

||||

``` |

||||

|

||||

### Folder Structure |

||||

|

||||

The root folder for unit tests is `packages/nocodb/tests/unit` |

||||

|

||||

- `rest` folder contains all the test suites for rest apis. |

||||

- `model` folder contains all the test suites for models. |

||||

- `factory` folder contains all the helper functions to create test data. |

||||

- `init` folder contains helper functions to configure test environment. |

||||

- `index.test.ts` is the root test suite file which imports all the test suites. |

||||

- `TestDbMngr.ts` is a helper class to manage test databases (i.e. creating, dropping, etc.). |

||||

|

||||

### Factory Pattern |

||||

|

||||

- Use factories to create, update, and delete data. No data should be created, updated, or deleted directly in a test. |

||||

- When writing a factory, make sure it can be used with as few parameters as possible, with default values for the rest. |

||||

- Use named parameters for factories. |

||||

```ts |

||||

createUser({ email, password}) |

||||

``` |

||||

- Use one file per factory. |

||||
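To make the factory guidelines concrete, here is a minimal in-memory sketch. It is not one of the repository's factories (those live in the `factory` folder and talk to the test server); the `User` shape and the default values are hypothetical.

```typescript
// Hypothetical in-memory factory, for illustration only.
interface User {
  id: number;
  email: string;
  password: string;
  roles: string;
}

let nextUserId = 1;

// Named parameters with defaults: a test passes only what it cares about.
function createUser({
  email = `user${nextUserId}@example.com`,
  password = "Password123.",
  roles = "editor",
}: Partial<Omit<User, "id">> = {}): User {
  return { id: nextUserId++, email, password, roles };
}
```

A test that only cares about the email can call `createUser({ email: 'a@b.c' })`, and a test that needs any user at all can call `createUser()`.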

|

||||

|

||||

### Walk through of writing a Unit Test |

||||

|

||||

We will create a `Table` test suite as an example. |

||||

|

||||

#### Configure test |

||||

|

||||

We will configure `beforeEach`, which is called before each test is executed. We will use the `init` function from `nocodb/packages/nocodb/tests/unit/init/index.ts`, a helper that configures the test environment (i.e. resetting state, etc.). |

||||

|

||||

`init` does the following: |

||||

|

||||

- Initializes a `Noco` instance (reused in all tests). |

||||

- Restores the `meta` and `sakila` databases to their initial state. |

||||

- Creates the root user. |

||||

- Returns `context`, which holds the `auth token` for the created user, the node server instance (`app`), and `dbConfig`. |

||||

|

||||

We will use the `createProject` and `createTable` factories to create a project and a table. |

||||

|

||||

```typescript |

||||

let context; |

||||

let project; |

||||

let table; |

||||

|

||||

beforeEach(async function () { |

||||

context = await init(); |

||||

|

||||

project = await createProject(context); |

||||

table = await createTable(context, project); |

||||

}); |

||||

``` |

||||

|

||||

#### Test case |

||||

|

||||

We will use the `it` function to create a test case, `supertest` to make a request to the server, and `expect` (from `chai`) to assert the response. |

||||

|

||||

```typescript |

||||

it('Get table list', async function () { |

||||

const response = await request(context.app) |

||||

.get(`/api/v1/db/meta/projects/${project.id}/tables`) |

||||

.set('xc-auth', context.token) |

||||

.send({}) |

||||

.expect(200); |

||||

|

||||

expect(response.body.list).to.be.an('array').not.empty; |

||||

}); |

||||

``` |

||||

|

||||

:::info |

||||

|

||||

We can also run an individual test by using `.only` on the `describe` or `it` function and then running the test command. |

||||

|

||||

::: |

||||

|

||||

```typescript |

||||

it.only('Get table list', async () => { |

||||

``` |

||||

|

||||

#### Integrating the New Test Suite |

||||

|

||||

We create a new file `table.test.ts` in the `packages/nocodb/tests/unit/rest/tests` directory. |

||||

|

||||

```typescript |

||||

import 'mocha'; |

||||

import request from 'supertest'; |

||||

import init from '../../init'; |

||||

import { createTable, getAllTables } from '../../factory/table'; |

||||

import { createProject } from '../../factory/project'; |

||||

import { defaultColumns } from '../../factory/column'; |

||||

import Model from '../../../../src/lib/models/Model'; |

||||

import { expect } from 'chai'; |

||||

|

||||

function tableTest() { |

||||

let context; |

||||

let project; |

||||

let table; |

||||

|

||||

beforeEach(async function () { |

||||

context = await init(); |

||||

|

||||

project = await createProject(context); |

||||

table = await createTable(context, project); |

||||

}); |

||||

|

||||

it('Get table list', async function () { |

||||

const response = await request(context.app) |

||||

.get(`/api/v1/db/meta/projects/${project.id}/tables`) |

||||

.set('xc-auth', context.token) |

||||

.send({}) |

||||

.expect(200); |

||||

|

||||

expect(response.body.list).to.be.an('array').not.empty; |

||||

}); |

||||

} |

||||

|

||||

export default function () { |

||||

describe('Table', tableTest); |

||||

} |

||||

``` |

||||

|

||||

We can then import the `Table` test suite into the `Rest` test suite in `packages/nocodb/tests/unit/rest/index.test.ts`. (The `Rest` test suite is imported in the root test suite file, `packages/nocodb/tests/unit/index.test.ts`.) |

||||

|

||||

### Seeding Sample DB (Sakila) |

||||

|

||||

```typescript |

||||

|

||||

function tableTest() { |

||||

let context; |

||||

let sakilaProject: Project; |

||||

let customerTable: Model; |

||||

|

||||

beforeEach(async function () { |

||||

context = await init(); |

||||

|

||||

/******* Start : Seeding sample database **********/ |

||||

sakilaProject = await createSakilaProject(context); |

||||

/******* End : Seeding sample database **********/ |

||||

|

||||

customerTable = await getTable({project: sakilaProject, name: 'customer'}) |

||||

}); |

||||

|

||||

it('Get table data list', async function () { |

||||

const response = await request(context.app) |

||||

.get(`/api/v1/db/data/noco/${sakilaProject.id}/${customerTable.id}`) |

||||

.set('xc-auth', context.token) |

||||

.send({}) |

||||

.expect(200); |

||||

|

||||

expect(response.body.list[0]['FirstName']).to.equal('MARY'); |

||||

}); |

||||

} |

||||

``` |

||||

@ -0,0 +1,218 @@

|

||||

--- |

||||

title: "Playwright E2E Testing" |

||||

description: "Overview to playwright based e2e tests" |

||||

--- |

||||

|

||||

## How to run tests |

||||

|

||||

All the tests reside in `tests/playwright` folder. |

||||

|

||||

Make sure to install the dependencies (in the playwright folder): |

||||

|

||||

```bash |

||||

pnpm --filter=playwright install |

||||

pnpm exec playwright install --with-deps chromium |

||||

``` |

||||

|

||||

### Run Test Server |

||||

|

||||

Start the backend test server (in `packages/nocodb` folder): |

||||

|

||||

```bash |

||||

pnpm run watch:run:playwright |

||||

``` |

||||

|

||||

Start the frontend test server (in `packages/nc-gui` folder): |

||||

|

||||

```bash |

||||

NUXT_PAGE_TRANSITION_DISABLE=true pnpm run dev |

||||

``` |

||||

|

||||

### Running all tests |

||||

|

||||

To select the db type, rename `.env.example` to `.env` and set `E2E_DEV_DB_TYPE` to `sqlite` (default), `mysql` or `pg`. |

||||
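As a rough illustration of how such a setting can be validated, the sketch below parses a raw `E2E_DEV_DB_TYPE` value and applies the `sqlite` default. `parseE2eDbType` is a hypothetical helper, and rejecting unknown values is a design choice of this sketch; how the Playwright setup actually reads the variable is not shown in this document.

```typescript
// Hypothetical helper; not taken from the Playwright test setup.
type E2eDbType = "sqlite" | "mysql" | "pg";

const supportedDbTypes: readonly E2eDbType[] = ["sqlite", "mysql", "pg"];

function parseE2eDbType(raw: string | undefined): E2eDbType {
  // Unset or empty means the default.
  if (raw === undefined || raw.trim() === "") return "sqlite";
  const value = raw.trim().toLowerCase();
  const match = supportedDbTypes.find((type) => type === value);
  if (match === undefined) {
    throw new Error(`Unsupported E2E_DEV_DB_TYPE: ${raw}`);
  }
  return match;
}
```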

|

||||

Headless mode (without opening a browser): |

||||

|

||||

```bash |

||||

pnpm run test |

||||

``` |

||||

|

||||

With browser: |

||||

|

||||

```bash |

||||

pnpm run test:debug |

||||

``` |

||||

|

||||

To set up MySQL (sakila): |

||||

|

||||

```bash |

||||

docker-compose -f ./tests/playwright/scripts/docker-compose-mysql-playwright.yml up -d |

||||

``` |

||||

|

||||

To set up Postgres (sakila): |

||||

|

||||

```bash |

||||

docker-compose -f ./tests/playwright/scripts/docker-compose-playwright-pg.yml up -d |

||||

``` |

||||

|

||||

### Running individual tests |

||||

|

||||

Add `.only` to the test you want to run: |

||||

|

||||

```js |

||||

test.only('should login', async ({ page }) => { |

||||

// ... |

||||

}) |

||||

``` |

||||

|

||||

```bash |

||||

pnpm run test |

||||

``` |

||||

|

||||

## Concepts |

||||

|

||||

### Independent tests |

||||

|

||||

- All tests are independent of each other. |

||||

- Each test starts with a fresh project and a fresh sakila database (there is also an option not to use the sakila db). |

||||

- Each test creates a new user (with email `user@nocodb.com`) and logs in to the dashboard as that user. |

||||

|

||||

Caveats: |

||||

|

||||

- Some things are shared, e.g. users, plugins, etc., so be cautious when writing tests that touch them. A fix for this is in the works. |

||||

- In tests, we prefix the email and project with the test id; these are deleted after the test is done. |

||||

|

||||

### What to test |

||||

|

||||

- UI verification. This includes verifying the state of a UI element, i.e. whether the element is visible, whether it has a particular text, etc. |

||||

- Tests should verify the complete user flow. A test has a default timeout of 60 seconds; if a test takes longer than that, it is a sign that it should be broken down into smaller tests. |

||||

- Tests should also verify all the side effects a feature will have (e.g. when adding a new column type, verify column deletion as well), along with error cases. |

||||

- Test names should be descriptive. It should be easy to understand what a test does just by reading its name. |

||||

|

||||

### Playwright |

||||

|

||||

- Playwright is a Node.js library for automating Chromium, Firefox and WebKit. |

||||

- For each test, a new browser context is created. This means that each test runs in a new incognito window. |

||||

- For assertions, always use `expect` from the `@playwright/test` library. It provides many useful assertions, which also have retry logic built in. |

||||

|

||||

## Page Objects |

||||

|

||||

- Page objects are used to abstract over the components/page. This makes the tests more readable and maintainable. |

||||

- All page objects are in `tests/playwright/pages` folder. |

||||

- All the test related code should be in page objects. |

||||

- Methods should be as thin as possible; it's better to have multiple small methods than one big method, which improves reusability. |

||||

|

||||

The methods of a page object can be classified into 2 categories: |

||||

|

||||

- Actions: Perform UI actions such as click, type, select, etc. An action method is also responsible for waiting for the element to be ready and for the action to be performed. This includes waiting for API calls to complete. |

||||

- Assertions: Assert the state of a UI element, i.e. whether the element is visible, whether it has a particular text, etc. Use `expect` from `@playwright/test`; where its built-in assertions do not fit, use `expect.poll` to wait for the assertion to pass. |

||||

|

||||

## Writing a test |

||||

|

||||

Let's write a test for testing filter functionality. |

||||

|

||||

For simplicity, we will assume `DashboardPage` is already implemented, with all the methods related to the dashboard page and its child components like Grid, etc. |

||||

|

||||

### Create a test suite |

||||

|

||||

Create a new file `filter.spec.ts` in the `tests/playwright/tests` folder and use the `setup` method to create a new project and user. |

||||

|

||||

```ts |

||||

import { test, expect } from '@playwright/test'; |

||||

import setup, { NcContext } from '../setup'; |

||||

|

||||

test.describe('Filter', () => { |

||||

let context: NcContext; |

||||

|

||||

test.beforeEach(async ({ page }) => { |

||||

context = await setup({ page }); |

||||

}) |

||||

|

||||

test('should filter', async ({ page }) => { |

||||

// ... |

||||

}); |

||||

}); |

||||

``` |

||||

|

||||

### Create a page object |

||||

|

||||

Since the filter is scoped, UI-wise, to a `Toolbar`, we will add the filter page object to the `ToolbarPage` page object. |

||||

|

||||

```ts |

||||

export class ToolbarPage extends BasePage { |

||||

readonly parent: GridPage | GalleryPage | FormPage | KanbanPage; |

||||

readonly filter: ToolbarFilterPage; |

||||

|

||||

constructor(parent: GridPage | GalleryPage | FormPage | KanbanPage) { |

||||

super(parent.rootPage); |

||||

this.parent = parent; |

||||

this.filter = new ToolbarFilterPage(this); |

||||

} |

||||

} |

||||

``` |

||||

|

||||

We will create `ToolbarFilterPage` page object, which will have all the methods related to filter. |

||||

|

||||

```ts |

||||

export class ToolbarFilterPage extends BasePage { |

||||

readonly toolbar: ToolbarPage; |

||||

|

||||

constructor(toolbar: ToolbarPage) { |

||||

super(toolbar.rootPage); |

||||

this.toolbar = toolbar; |

||||

} |

||||

} |

||||

``` |

||||

|

||||

Here `BasePage` is an abstract class used to enforce structure for all page objects. Thus all page objects *should* inherit from `BasePage`. It provides: |

||||

|

||||

- Helper methods like `waitForResponse` and `getClipboardText` (these can be accessed on any page object, e.g. `this.waitForResponse`). |

||||

- Structure for page objects: it enforces that all page objects have a `rootPage` property, which is the page object created in the test setup. |

||||

- A required `get` method on every page, which returns the locator of the main container of that page, so DOM selection can be focused, e.g. |

||||

|

||||

```js |

||||

// This will only select the button inside the container of the concerned page |

||||

await this.get().locator('button').count(); |

||||

``` |

||||
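The structure that `BasePage` enforces can be sketched without Playwright. Everything below is a stand-in written for this explanation: `LocatorLike` and `PageLike` mimic only the `locator` method, `BasePageSketch` is not the repository's `BasePage`, and the `.nc-toolbar` selector is hypothetical.

```typescript
// Minimal stand-ins for Playwright's Page and Locator, so the sketch is
// self-contained. Only the `locator` method is modelled.
interface LocatorLike {
  locator(selector: string): LocatorLike;
}

interface PageLike {
  locator(selector: string): LocatorLike;
}

// Hypothetical base class: every page object keeps the root page and
// must say where its own main container is.
abstract class BasePageSketch {
  readonly rootPage: PageLike;

  constructor(rootPage: PageLike) {
    this.rootPage = rootPage;
  }

  abstract get(): LocatorLike;
}

class ToolbarSketch extends BasePageSketch {
  // Hypothetical selector for the toolbar container.
  get(): LocatorLike {
    return this.rootPage.locator(".nc-toolbar");
  }

  // Scoped lookup: only buttons inside the toolbar container are matched.
  buttons(): LocatorLike {
    return this.get().locator("button");
  }
}
```

Because every lookup starts from `get()`, a selector as generic as `button` stays limited to the page object's own container.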

|

||||

### Writing an action method |

||||

|

||||

This is a method that resets/clears all the filters. Since this is an action method, it will also wait for the `delete` filter API to return. Ignoring this API call will cause flakiness in the test down the line. |

||||

|

||||

```ts |

||||

async resetFilter() { |

||||

await this.waitForResponse({ |

||||

uiAction: async () => await this.get().locator('.nc-filter-item-remove-btn').click(), |

||||

httpMethodsToMatch: ['DELETE'], |

||||

requestUrlPathToMatch: '/api/v1/db/meta/filters/', |

||||

}); |

||||

} |

||||

``` |

||||

|

||||

### Writing an assertion/verification method |

||||

|

||||

Here we use `expect` from `@playwright/test` library, which has retry logic built in. |

||||

|

||||

```ts |

||||

import { expect } from '@playwright/test'; |

||||

|

||||

async verifyFilter({ title }: { title: string }) { |

||||

await expect( |

||||

this.get().locator(`[data-testid="nc-fields-menu-${title}"]`).locator('input[type="checkbox"]') |

||||

).toBeChecked(); |

||||

} |

||||

``` |

||||

|

||||

## Tips to avoid flakiness |

||||

|

||||

- If a UI action causes an API call or a UI state change, wait for that API call to complete or the UI state to change. |

||||

- What to wait for can be situation-specific, but in general it is best to wait for the final state to be reached. For example, when creating a filter, waiting for the filter API to complete may seem sufficient, but after it returns the table rows are reloaded and the UI state changes, so it is better to wait for the table rows to be reloaded. |

||||
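The idea of waiting for the final state can be illustrated with a small polling helper. This is a generic sketch, not Playwright's `expect.poll` and not code from the test suite; `pollUntil` and its option names are invented for this example.

```typescript
// Generic polling sketch: keep reading a value until it reaches the
// final state, or fail after a timeout.
async function pollUntil<T>(
  read: () => T | Promise<T>,
  isFinal: (value: T) => boolean,
  { intervalMs = 100, timeoutMs = 5000 }: { intervalMs?: number; timeoutMs?: number } = {},
): Promise<T> {
  const deadline = Date.now() + timeoutMs;
  for (;;) {
    const value = await read();
    if (isFinal(value)) return value;
    if (Date.now() >= deadline) {
      throw new Error("Timed out waiting for the final state");
    }
    // Wait before the next read instead of spinning.
    await new Promise<void>((resolve) => setTimeout(resolve, intervalMs));
  }
}
```

In the filter example, `read` would return the currently rendered row count and `isFinal` would compare it with the expected count, so the test proceeds only after the rows have been reloaded.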

|

||||

|

||||

## Accessing playwright report in the CI |

||||

|

||||

- Open the `Summary` tab of the CI workflow in GitHub Actions. |

||||

- Scroll down to the `Artifacts` section. |

||||

- Access the reports, which are suffixed with the db type and shard number (corresponding to the CI workflow name), e.g. `playwright-report-mysql-2` is for the `playwright-mysql-2` workflow. |

||||

- Download it and run `pnpm install -D @playwright/test && npx playwright show-report ./` inside the downloaded folder. |

||||

@ -0,0 +1,164 @@

|

||||

--- |

||||

title: "Releases & Builds" |

||||

description: "NocoDB creates Docker and Binaries for each PR" |

||||

--- |

||||

## Builds of NocoDB |

||||

|

||||

There are 3 kinds of docker builds in NocoDB |

||||

|

||||

- Release builds [nocodb/nocodb](https://hub.docker.com/r/nocodb/nocodb) : built during NocoDB release. |

||||

- Daily builds [nocodb/nocodb-daily](https://hub.docker.com/r/nocodb/nocodb-daily) : built every 6 hours from Develop branch. |

||||

- Timely builds [nocodb/nocodb-timely](https://hub.docker.com/r/nocodb/nocodb-timely): built for every PR and manually triggered PRs. |

||||

|

||||

Below is an overview of how to make these builds and what happens behind the scenes. |

||||

|

||||

## Release builds |

||||

|

||||

### How to make a release build ? |

||||

|

||||

- Click [NocoDB release action](https://github.com/nocodb/nocodb/actions/workflows/release-nocodb.yml) |

||||

- You should see the below screen |

||||

|

||||

|

||||

- Change `Use workflow from` to `Branch: master`. If you choose the wrong branch, the workflow will end. |

||||

|

||||

|

||||

- Then there are two cases: you can either leave the target tag and previous tag blank or enter values manually. |

||||

|

||||

- Target Tag means the target deployment version, while Previous Tag means the latest version as of now. Previous Tag is used for Release Note only - showing the file / commit differences between two tags. |

||||

|

||||

### Tagging |

||||

|

||||

Given that the actual release tag is `0.100.0`, the naming convention is as follows: |

||||

|

||||

- `0.100.0-beta.0` (first version of pre-release) |

||||

- `0.100.0-beta.1` (include bug fix changes on top of the previous version) |

||||

- `0.100.0-beta.2`(include bug fix changes on top of the previous version) |

||||

- and so on ... |

||||

- `0.100.0` (actual release) |

||||

- `0.100.1` (minor bug fix release) |

||||

- `0.100.2` (minor bug fix release) |

||||
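The ordering implied by this convention (betas before the actual release, bug fix releases after it) can be expressed as a comparison function. This is a sketch under the assumption that tags always look like `X.Y.Z` or `X.Y.Z-beta.N`; `parseTag` and `compareTags` are hypothetical helpers, not part of the release workflow.

```typescript
// Hypothetical helpers; assumes tags of the form X.Y.Z or X.Y.Z-beta.N.
interface ParsedTag {
  major: number;
  minor: number;
  patch: number;
  beta: number | null; // null means an actual (non-beta) release
}

function parseTag(tag: string): ParsedTag {
  const m = /^(\d+)\.(\d+)\.(\d+)(?:-beta\.(\d+))?$/.exec(tag);
  if (m === null) throw new Error(`Unrecognised tag: ${tag}`);
  return {
    major: Number(m[1]),
    minor: Number(m[2]),
    patch: Number(m[3]),
    beta: m[4] === undefined ? null : Number(m[4]),
  };
}

// Negative when `a` comes before `b`, positive when after, 0 when equal.
function compareTags(a: string, b: string): number {
  const x = parseTag(a);
  const y = parseTag(b);
  const core = x.major - y.major || x.minor - y.minor || x.patch - y.patch;
  if (core !== 0) return core;
  // Same core version: a beta sorts before the actual release.
  if (x.beta === null) return y.beta === null ? 0 : 1;
  if (y.beta === null) return -1;
  return x.beta - y.beta;
}
```

Sorting the tags listed above with `compareTags` reproduces the order in which they are written.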

|

||||

### Case 1: Leaving inputs blank |

||||

|

||||

- If Previous Tag is blank, then the value will be fetched from [latest](https://github.com/nocodb/nocodb/releases/latest) |

||||

- If Target Tag is blank, then the value will be Previous Tag plus one. Example: 0.90.11 (Previous Tag) + 0.0.1 = 0.90.12 (Target Tag) |

||||
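The "Previous Tag plus one" rule is a patch-level increment. A minimal sketch, assuming tags of the plain `X.Y.Z` form; `bumpPatch` is a hypothetical helper, and the optional `step` parameter is added here only to show that a larger increment is the same arithmetic.

```typescript
// Hypothetical helper; assumes a plain X.Y.Z tag.
function bumpPatch(previousTag: string, step: number = 1): string {
  const m = /^(\d+)\.(\d+)\.(\d+)$/.exec(previousTag);
  if (m === null) throw new Error(`Unrecognised tag: ${previousTag}`);
  // Only the last component changes: 0.90.11 becomes 0.90.12.
  return `${m[1]}.${m[2]}.${Number(m[3]) + step}`;
}

const targetTag = bumpPatch("0.90.11");
```

Here `targetTag` is `0.90.12`, matching the example above.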

|

||||

### Case 2: Manually Input |

||||

|

||||

Why? Sometimes an NPM deployment goes wrong. Because NPM doesn't allow redeploying to the same tag, we cannot simply use the previous tag + 1 in that case. Instead, we use the previous tag + 2 and configure it manually. |

||||

|

||||

- After that, click `Run workflow` to start |

||||

- You can see Summary for the overall job status. |

||||

- Once `release-draft-note` and `release-executables` are finished, go to [releases](https://github.com/nocodb/nocodb/releases), edit the draft note, and save it as a draft for the time being. |

||||

- Example: Adding header, update content if necessary, and click `Auto-generate release notes` to include more info. |

||||

- Once `release-docker` is finished, test it locally first. You should see the `Upgrade Available` notification in the UI, because the release note has not been published yet (the version is retrieved from there). |

||||

- Once everything is finished, publish the release note; the deployment is then considered DONE. |

||||

|

||||

### How does release action work ? |

||||

|

||||

#### validate-branch |

||||

|

||||

Checks whether `github.ref` is master. Other branches are not accepted. |

||||

|

||||

#### process-input |

||||

|

||||

Fills in the target tag or previous tag if necessary. |

||||

|

||||

#### pr-to-master |

||||

|

||||

Automates a PR from the develop branch to master so that we know the actual differences between the previous tag and the current tag. We use the master branch as the deployment base. |

||||

|

||||

#### release-npm |

||||

|

||||

Builds the frontend and backend and releases them to NPM. Changes made during the build, such as version bumping, are committed and pushed to a temporary branch; an automated PR is then created and merged into the master branch. |

||||

|

||||

Note that once you publish with a certain tag, you cannot publish with the same tag again. |

||||

|

||||

#### release-draft-note |

||||

|

||||

Generate a draft release note. Some actions need to be done after this step. |

||||

|

||||

#### release-docker |

||||

|

||||

Build docker image and publish it to Docker Hub. It may take around 15 - 30 mins. |

||||

|

||||

#### close-issues |

||||

|

||||

Open issues marked with the labels `Status: Fixed` and `Status: Resolved` are closed automatically by a bot, with a comment mentioning the version that includes the fix. |

||||

|

||||

Example: |

||||

|

||||

|

||||

|

||||

#### publish-docs |

||||

|

||||

Publishes the documentation. |

||||

|

||||

#### update-sdk-path |

||||

|

||||

`nocodb-sdk` is used in the frontend and backend. In the develop branch, however, its value is `file:../nocodb-sdk` for development purposes (so that changes made to nocodb-sdk in develop are included in the frontend and backend). During deployment, the value is changed to the target tag. This job changes it back. |

||||

|

||||

#### sync-to-develop |

||||

|

||||

Once the deployment is finished, some new changes will have been pushed to the master branch. This job syncs those changes back to develop so that the two branches do not differ. |

||||

|

||||

## Daily builds |

||||

|

||||

### What are daily builds ? |

||||

- NocoDB creates a build from the develop branch every 6 hours and publishes it as nocodb/nocodb-daily. |

||||

- This makes it easy to try out what is in the develop branch. |

||||

|

||||

### Docker images |

||||

- The docker images will be built and pushed to Docker Hub (See [nocodb/nocodb-daily](https://hub.docker.com/r/nocodb/nocodb-daily/tags) for the full list). |

||||

|

||||

## Timely builds |

||||

|

||||

### What are timely builds ? |

||||

NocoDB has GitHub Actions that create Docker images and binaries for each PR. These can be found in a **comment on the last commit** of the PR. |

||||

|

||||

Example shown below |

||||

- Go to a PR and click on the comment. |

||||

<img width="1111" alt="Screenshot 2023-01-23 at 15 46 36" src="https://user-images.githubusercontent.com/5435402/214083736-80062398-3712-430f-9865-86b110090c91.png" /> |

||||

|

||||

- Click on the link to copy the docker image and run it locally. |

||||

<img width="1231" alt="Screenshot 2023-01-23 at 15 46 55" src="https://user-images.githubusercontent.com/5435402/214083755-945d9485-2b9e-4739-8408-068bdf4a84b7.png" /> |

||||

|

||||

|

||||

This is to |

||||

- reduce pull request cycle time |

||||

- allow issue reporters / reviewers to verify the fix without setting up their local machines |

||||

|

||||

### Docker images |

||||

When a non-draft Pull Request is created, reopened or synchronized, a timely Docker build is triggered only for changes in the following paths: |

||||

- `packages/nocodb-sdk/**` |

||||

- `packages/nc-gui/**` |

||||

- `packages/nc-plugin/**` |

||||

- `packages/nocodb/**` |

||||
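The path filter above amounts to a prefix check on the changed files. The sketch below is illustrative only: `shouldBuildTimelyImage` and the `PullRequestInfo` shape are hypothetical, and the real filter is expressed in the GitHub Actions workflow rather than in code like this.

```typescript
// Paths copied from the list above; everything else here is hypothetical.
const watchedPrefixes: readonly string[] = [
  "packages/nocodb-sdk/",
  "packages/nc-gui/",
  "packages/nc-plugin/",
  "packages/nocodb/",
];

interface PullRequestInfo {
  isDraft: boolean;
  changedFiles: readonly string[];
}

function shouldBuildTimelyImage(pr: PullRequestInfo): boolean {
  // Draft PRs do not trigger a timely build.
  if (pr.isDraft) return false;
  // At least one changed file must be under a watched path.
  return pr.changedFiles.some((file) =>
    watchedPrefixes.some((prefix) => file.startsWith(prefix)),
  );
}
```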

|

||||

The docker images will be built and pushed to Docker Hub (See [nocodb/nocodb-timely](https://hub.docker.com/r/nocodb/nocodb-timely/tags) for the full list). Once the image is ready, Github bot will add a comment with the command in the pull request. The tag would be `<NOCODB_CURRENT_VERSION>-pr-<PR_NUMBER>-<YYYYMMDD>-<HHMM>`. |

||||
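The tag format can be assembled as shown in this sketch. `timelyTag` is a hypothetical helper, and the use of UTC for the date and time parts is an assumption; the document does not say which time zone the workflow uses.

```typescript
// Hypothetical helper. Assumes the date and time parts are in UTC.
function timelyTag(currentVersion: string, prNumber: number, builtAt: Date): string {
  // Zero-pad single-digit month, day, hour, and minute values.
  const pad = (n: number): string => (n < 10 ? "0" : "") + n;
  const yyyymmdd =
    String(builtAt.getUTCFullYear()) +
    pad(builtAt.getUTCMonth() + 1) +
    pad(builtAt.getUTCDate());
  const hhmm = pad(builtAt.getUTCHours()) + pad(builtAt.getUTCMinutes());
  return `${currentVersion}-pr-${prNumber}-${yyyymmdd}-${hhmm}`;
}
```

For example, version `0.100.0`, PR number `1234`, and a build time of 2023-01-23 15:46 UTC would give `0.100.0-pr-1234-20230123-1546` (the version and PR number here are made up).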

|

||||

|

||||

|

||||

## Executables or Binaries |

||||

|

||||

Similarly, we provide a timely build for executables for non-docker users. The source code will be built, packaged as binary files, and pushed to Github (See [nocodb/nocodb-timely](https://github.com/nocodb/nocodb-timely/releases) for the full list). |

||||

|

||||

Currently, we only support the following targets: |

||||

|

||||

- `node16-linux-arm64` |

||||

- `node16-macos-arm64` |

||||

- `node16-win-arm64` |

||||

- `node16-linux-x64` |

||||

- `node16-macos-x64` |

||||

- `node16-win-x64` |

||||

|

||||

Once the executables are ready, Github bot will add a comment with the commands in the pull request. |

||||

|

||||

|

||||

|

||||


||||

@ -0,0 +1,78 @@

|

||||

--- |

||||

title: "i18n translation" |

||||

description: "Contribute to NocoDB's i18n translation" |

||||

--- |

||||

|

||||

- NocoDB supports 30+ foreign languages & community contributions are now simplified via [Crowdin](https://crowdin.com/). |

||||

|

||||

|

||||

## How to add / edit translations ? |

||||

|

||||

### Using Github |

||||

- For English, make changes directly to [en.json](https://github.com/nocodb/nocodb/blob/develop/packages/nc-gui/lang/en.json) & commit to `develop` |

||||

- For any other language, use `crowdin` option. |

||||

|

||||

|

||||

### Using Crowdin |

||||

|

||||

- Set up a [Crowdin](https://crowdin.com) account |

||||

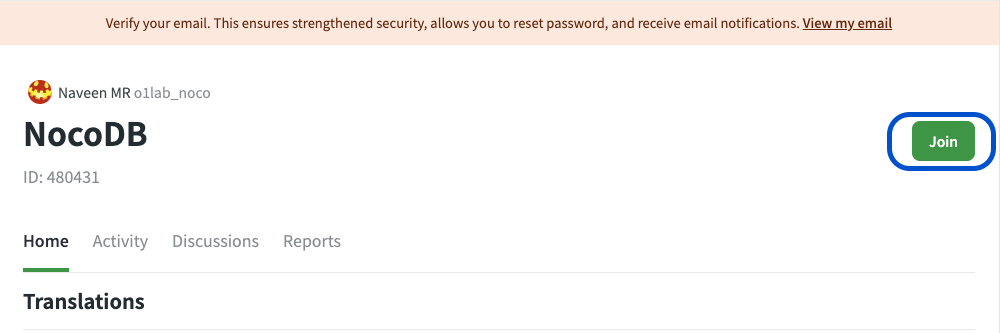

- Join [NocoDB](https://crowdin.com/project/nocodb) project |

||||

|

||||

|

||||

|

||||

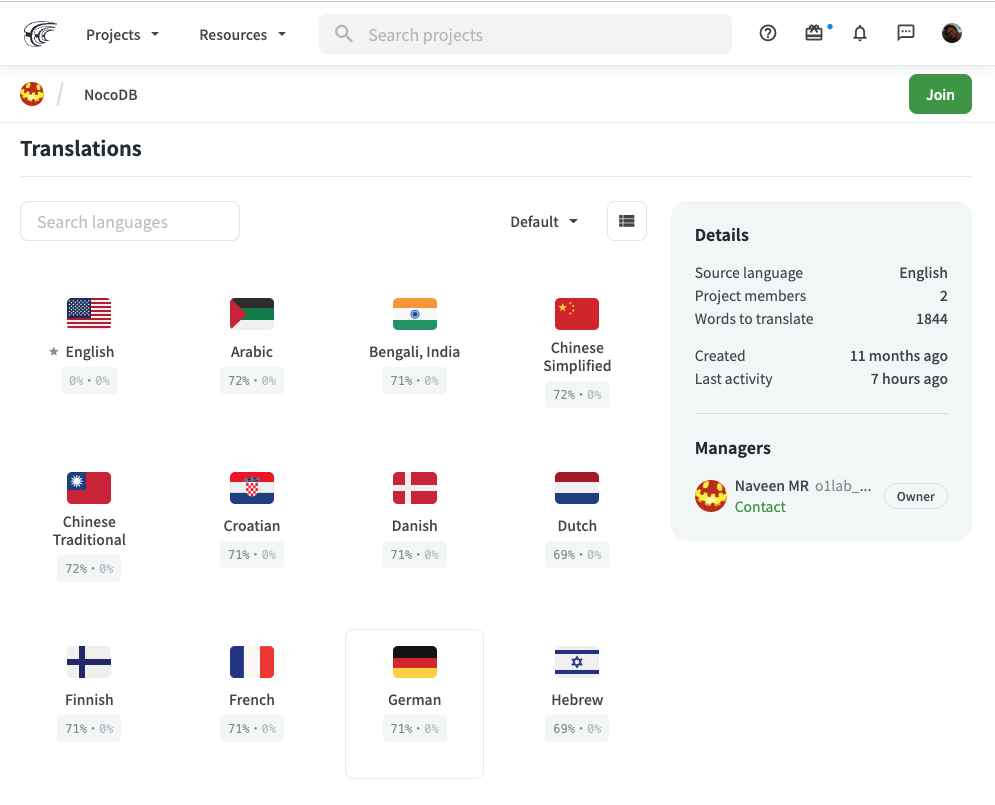

- Click the language that you wish to contribute to |

||||

|

||||

|

||||

|

||||

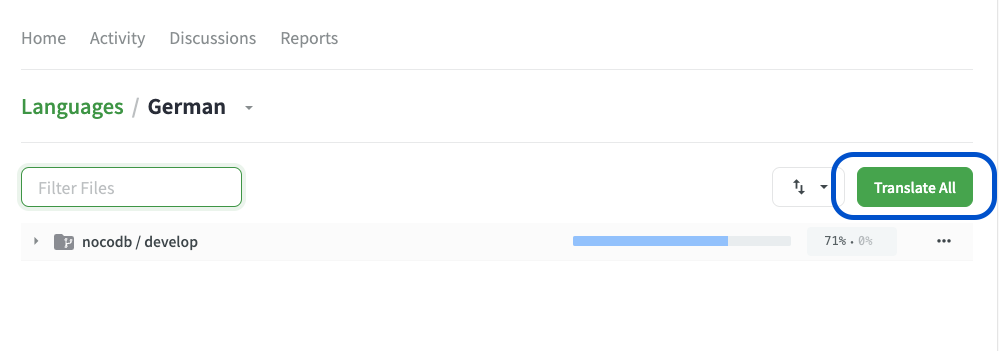

- Click the `Translate` button; this opens up `Crowdin Online Editor` |

||||

|

||||

|

||||

|

||||

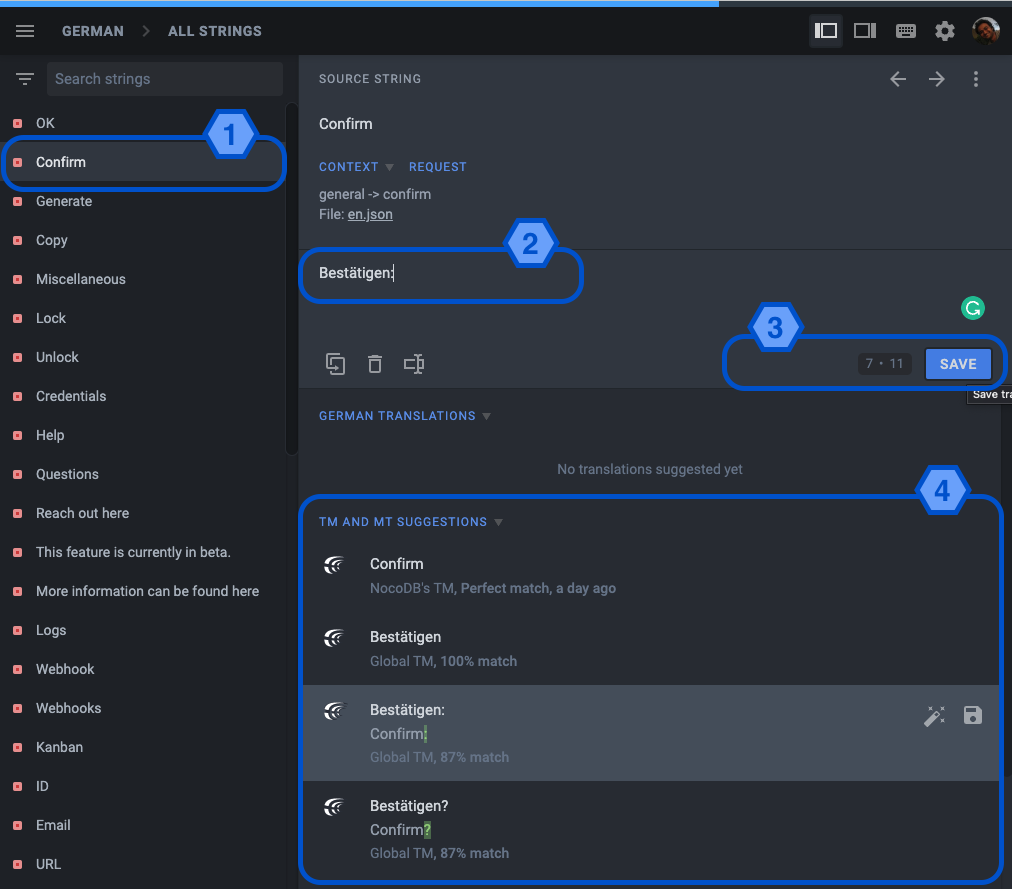

- Select string in `English` on the left-hand menu bar [1] |

||||

- Propose changes [2] |

||||

- Save [3] |

||||

Note: Crowdin provides translation recommendations, as in [4]. Click one directly if it is apt. |

||||

|

||||

|

||||

|

||||

A GitHub Pull Request will be triggered automatically (every 6 hours). We will follow up on the remaining integration work items. |

||||

|

||||

#### Reference |

||||

|

||||

Refer to the following articles for additional details about Crowdin Portal usage: |

||||

- [Translator Introduction](https://support.crowdin.com/crowdin-intro/) |

||||

- [Volunteer Translation Introduction](https://support.crowdin.com/for-volunteer-translators/) |

||||

- [Online Editor](https://support.crowdin.com/online-editor/) |

||||

|

||||

|

||||

|

||||

## How to add a new language ? |

||||

#### GitHub changes |

||||

- Update enumeration in `enums.ts` [packages/nc-gui/lib/enums.ts] |

||||

- Map JSON path in `a.i18n.ts` [packages/nc-gui/plugins/a.i18n.ts] |

||||

#### Crowdin changes [admin only] |

||||

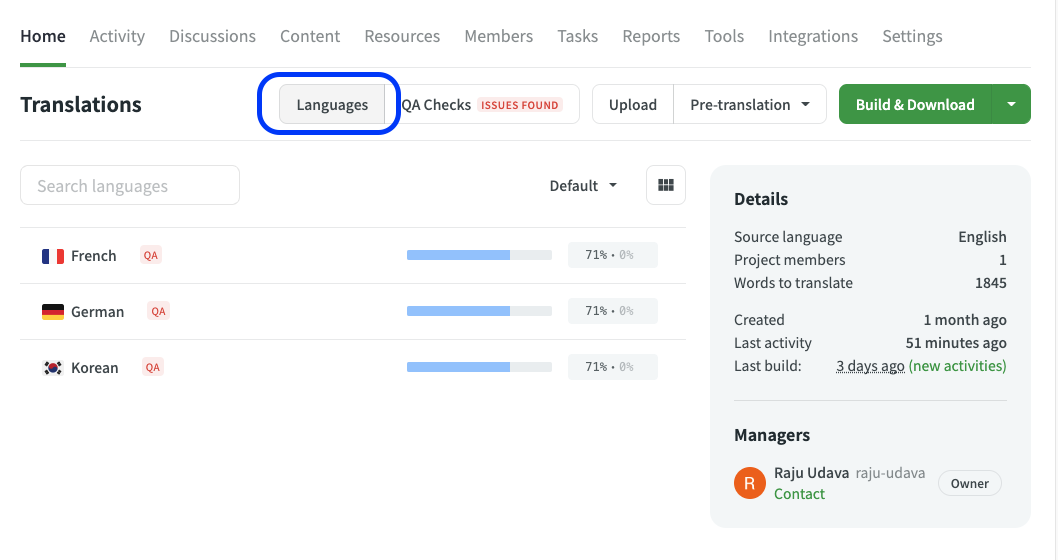

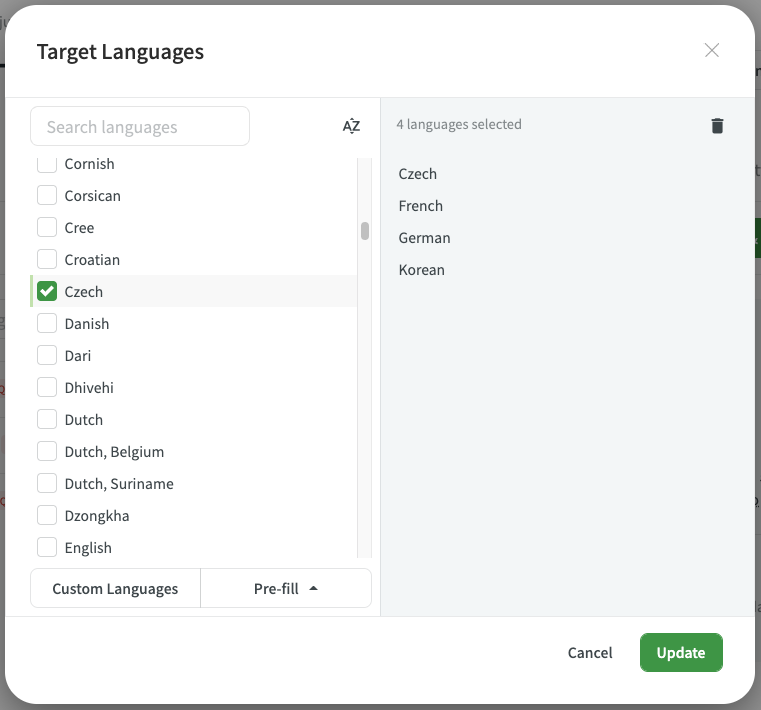

- Open `NocoDB` project |

||||

- Click on `Language` on the home tab |

||||

- Select target language, `Update` |

||||

- Update array in `tests/playwright/tests/language.spec.ts` |

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

## String Categories |

||||

- **General**: simple & common tokens (save, cancel, submit, open, close, home, and such) |

||||

- **Objects**: objects from NocoDB POV (project, table, field, column, view, page, and such) |

||||

- **Title**: screen headers (compact) (menu headers, modal headers) |

||||

- **Labels**: text box/ radio/ field headers (few words) (Labels over textbox, radio buttons, and such) |

||||

- **Activity**/ actions: work items (few words) (Create Project, Delete Table, Add Row, and such) |

||||

- **Tooltip**: additional information associated with work items (usually lengthy) (Additional information provided for activity) |

||||

- **Placeholder**: placeholders associated with various textboxes (Text placeholders) |

||||

- **Msg** |

||||

- Info: general/success category for everything |

||||

- Error: warnings & errors |

||||

- Toast: pop-up toast messages |

||||

|

||||

> Note: string name should be in camelCase. Use above list as priority order in case of ambiguity. |

||||
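The two rules in the note (camelCase names, and the list order as a tie-breaker) can be sketched as small helpers. The lower-case category keys and both function names are assumptions made for this example; they are not taken from the translation files.

```typescript
// Categories in the priority order given above. The lower-case keys are
// an assumption for this sketch.
const categoryPriority = [
  "general",
  "objects",
  "title",
  "labels",
  "activity",
  "tooltip",
  "placeholder",
  "msg",
] as const;

type Category = (typeof categoryPriority)[number];

// camelCase: starts with a lower-case letter, then letters and digits only.
function isCamelCase(name: string): boolean {
  return /^[a-z][a-zA-Z0-9]*$/.test(name);
}

// When a string could belong to several categories, pick the one that
// appears first in the priority list.
function pickCategory(candidates: readonly Category[]): Category {
  for (const category of categoryPriority) {
    if (candidates.indexOf(category) !== -1) return category;
  }
  throw new Error("No candidate category given");
}
```

For instance, a string that could be either a tooltip or a label would be filed under `labels`, since that category comes first in the list.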

@ -0,0 +1,5 @@

|

||||

{ |

||||

"label": "Engineering", |

||||

"collapsible": true, |

||||

"collapsed": true |

||||

} |

||||
