18 KiB

MLflow Node

Overview

MLflow is an excellent open source platform to manage the ML lifecycle, including experimentation, reproducibility, deployment, and a central model registry.

MLflow task plugin used to execute MLflow tasks, Currently contains MLflow Projects and MLflow Models. (Model Registry will soon be rewarded for support)

- MLflow Projects: Package data science code in a format to reproduce runs on any platform.

- MLflow Models: Deploy machine learning models in diverse serving environments.

- Model Registry: Store, annotate, discover, and manage models in a central repository.

The MLflow plugin currently supports and will support the following:

- MLflow Projects

- BasicAlgorithm: contains LogisticRegression, svm, lightgbm, xgboost

- AutoML: AutoML tool, contains autosklean, flaml

- Custom projects: Support for running your own MLflow projects

- MLflow Models

- MLFLOW: Use

MLflow models serveto deploy a model service - Docker: Run the container after packaging the docker image

- MLFLOW: Use

Create Task

- Click

Project Management -> Project Name -> Workflow Definition, and click theCreate Workflowbutton to enter the DAG editing page. - Drag from the toolbar

task node to canvas.

task node to canvas.

Task Parameters and Example

- Please refer to DolphinScheduler Task Parameters Appendix

Default Task Parameterssection for default parameters.

| Parameter | Description |

|---|---|

| MLflow Tracking Server URI | MLflow Tracking Server URI, default http://localhost:5000. |

| Experiment Name | Create the experiment where the task is running, if the experiment does not exist. If the name is empty, it is set to Default, the same as MLflow. |

MLflow Projects

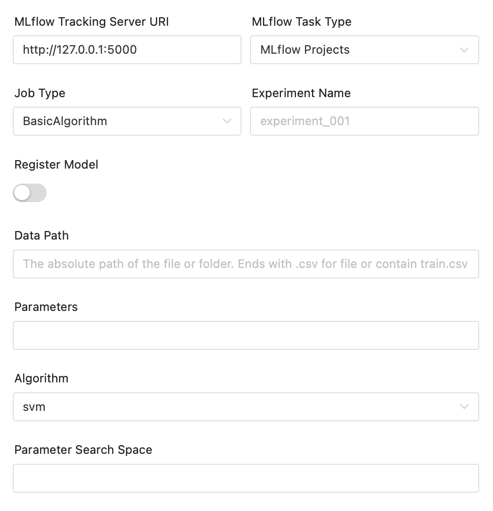

BasicAlgorithm

Task Parameters

| Parameter | Description |

|---|---|

| Register Model | Register the model or not. If register is selected, the following parameters are expanded. |

| Model Name | The registered model name is added to the original model version and registered as Production. |

| Data Path | The absolute path of the file or folder. Ends with .csv for file or contain train.csv and test.csv for folder(In the suggested way, users should build their own test sets for model evaluation. |

| Parameters | Parameter when initializing the algorithm/AutoML model, which can be empty. For example, parameters "time_budget=30;estimator_list=['lgbm']" for flaml. The convention will be passed with '; ' shards each parameter, using the name before the equal sign as the parameter name, and using the name after the equal sign to get the corresponding parameter value through python eval(). |

| Algorithm | The selected algorithm currently supports LR, SVM, LightGBM and XGboost based on scikit-learn form. |

| Parameter Search Space | Parameter search space when running the corresponding algorithm, which can be empty. For example, the parameter max_depth=[5, 10];n_estimators=[100, 200] for lightgbm. The convention will be passed with '; 'shards each parameter, using the name before the equal sign as the parameter name, and using the name after the equal sign to get the corresponding parameter value through python eval(). |

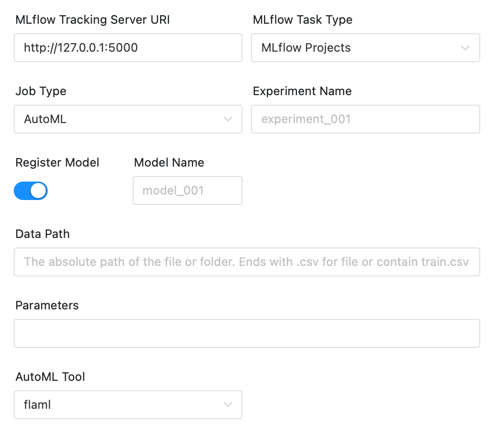

AutoML

Task Parameter

| Parameter | Description |

|---|---|

| Register Model | Register the model or not. If register is selected, the following parameters are expanded. |

| model name | The registered model name is added to the original model version and registered as Production. |

| Data Path | The absolute path of the file or folder. Ends with .csv for file or contain train.csv and test.csv for folder(In the suggested way, users should build their own test sets for model evaluation). |

| Parameters | Parameter when initializing the algorithm/AutoML model, which can be empty. For example, parameters n_estimators=200;learning_rate=0.2 for flaml. The convention will be passed with '; 'shards each parameter, using the name before the equal sign as the parameter name, and using the name after the equal sign to get the corresponding parameter value through python eval(). The detailed parameter list is as follows: |

| AutoML tool | The AutoML tool used, currently supports autosklearn and flaml. |

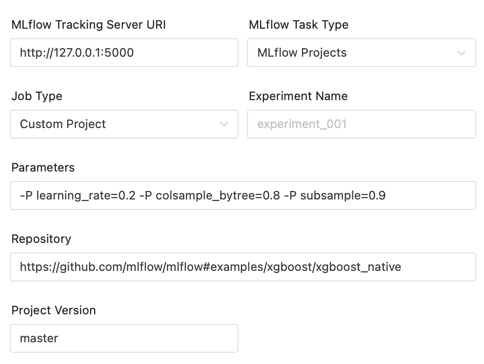

Custom projects

Task Parameter

| Parameter | Description |

|---|---|

| parameters | --param-list in mlflow run. For example -P learning_rate=0.2 -P colsample_bytree=0.8 -P subsample=0.9. |

| Repository | Repository url of MLflow Project, Support git address and directory on worker. If it's in a subdirectory, We add # to support this (same as mlflow run) , for example https://github.com/mlflow/mlflow#examples/xgboost/xgboost_native. |

| Project Version | Version of the project, default master. |

You can now use this feature to run all MLFlow projects on GitHub (For example MLflow examples ). You can also create your own machine learning library to reuse your work, and then use DolphinScheduler to use your library with one click.

MLflow Models

General Parameters

| Parameter | Description |

|---|---|

| Model-URI | Model-URI of MLflow , support models:/<model_name>/suffix format and runs:/ format. See https://mlflow.org/docs/latest/tracking.html#artifact-stores |

| Port | The port to listen on. |

MLflow

Docker

Environment to Prepare

Conda Environment

Please install anaconda or miniconda in advance.

Method A:

Config anaconda environment in /dolphinscheduler/conf/env/dolphinscheduler_env.sh.

Add the following content to the file:

# config anaconda environment

export PATH=/opt/anaconda3/bin:$PATH

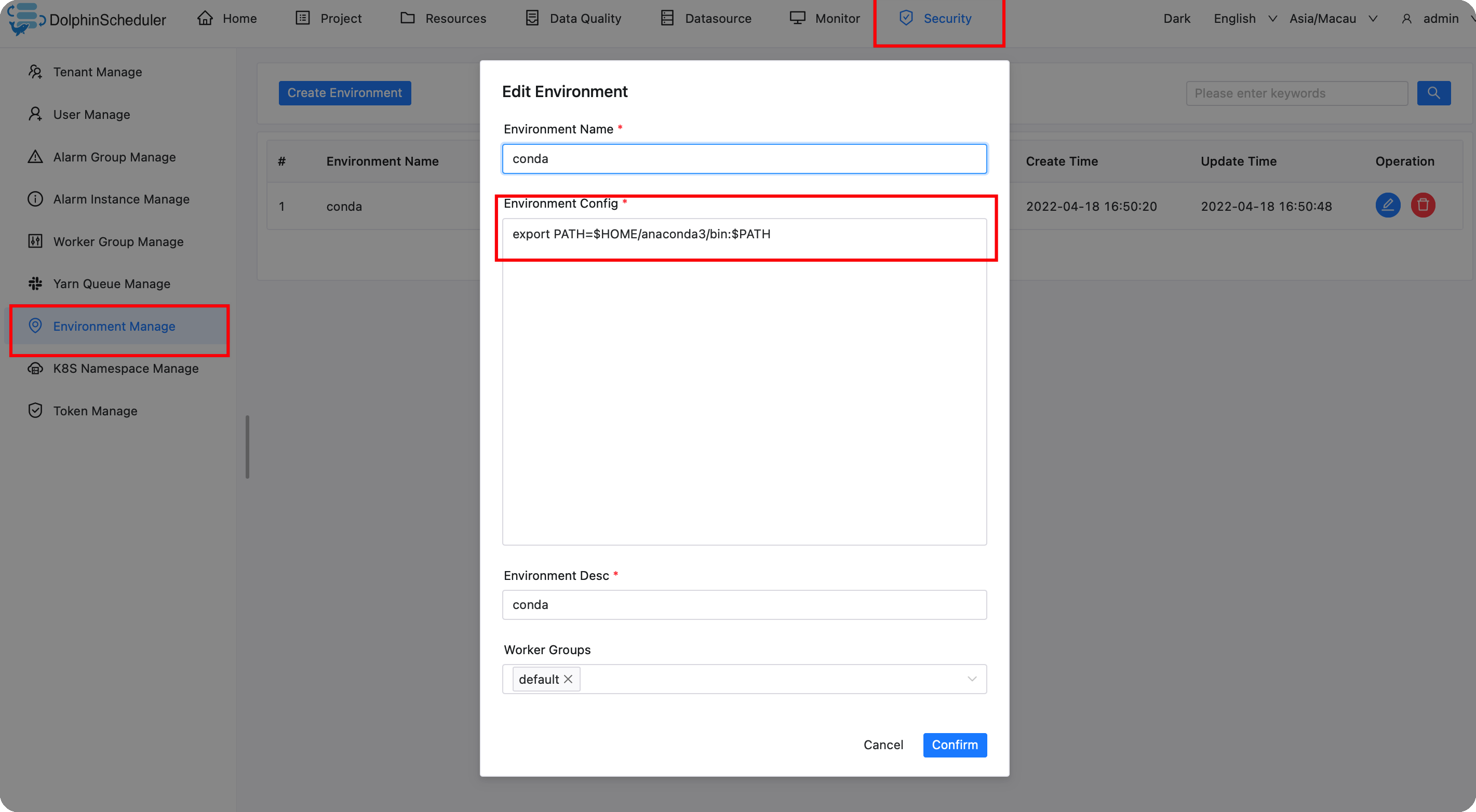

Method B:

You need to enter the admin account to configure a conda environment variable.

Note During the configuration task, select the conda environment created above. Otherwise, the program cannot find the Conda environment.

Start the MLflow Service

Make sure you have installed MLflow, using 'pip install mlflow'.

Create a folder where you want to save your experiments and models and start MLflow service.

mkdir mlflow

cd mlflow

mlflow server -h 0.0.0.0 -p 5000 --serve-artifacts --backend-store-uri sqlite:///mlflow.db

After running, an MLflow service is started.

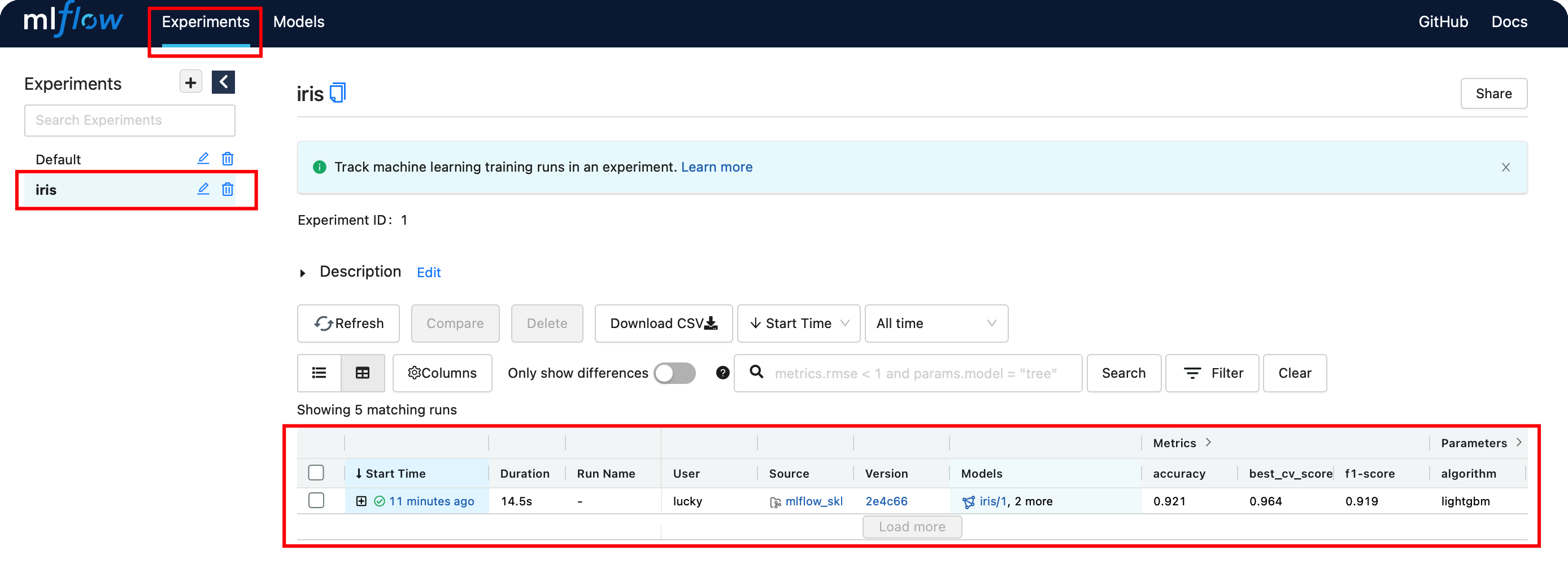

After this, you can visit the MLflow service (http://localhost:5000) page to view the experiments and models.

Preset Algorithm Repository Configuration

If you can't access github, you can modify the following fields in the commom.properties configuration file to replace the github address with an accessible address.

# mlflow task plugin preset repository

ml.mlflow.preset_repository=https://github.com/apache/dolphinscheduler-mlflow

# mlflow task plugin preset repository version

ml.mlflow.preset_repository_version="main"