@@ -93,4 +93,4 @@

\ No newline at end of file

+

diff --git a/escheduler-ui/src/js/conf/home/pages/monitor/pages/servers/statistics.vue b/escheduler-ui/src/js/conf/home/pages/monitor/pages/servers/statistics.vue

new file mode 100644

index 0000000000..a552e4cc00

--- /dev/null

+++ b/escheduler-ui/src/js/conf/home/pages/monitor/pages/servers/statistics.vue

@@ -0,0 +1,115 @@

+

+

+

+

diff --git a/escheduler-ui/src/js/conf/home/pages/monitor/pages/servers/worker.vue b/escheduler-ui/src/js/conf/home/pages/monitor/pages/servers/worker.vue

index 3cf0993415..960beeb14a 100644

--- a/escheduler-ui/src/js/conf/home/pages/monitor/pages/servers/worker.vue

+++ b/escheduler-ui/src/js/conf/home/pages/monitor/pages/servers/worker.vue

@@ -6,7 +6,7 @@

IP: {{item.host}}

- {{$t('Port')}}: {{item.port}}

+ {{$t('Process Pid')}}: {{item.port}}

{{$t('Zk registration directory')}}: {{item.zkDirectory}}

@@ -94,4 +94,4 @@

\ No newline at end of file

+

diff --git a/escheduler-ui/src/js/conf/home/pages/projects/pages/_source/instanceConditions/index.vue b/escheduler-ui/src/js/conf/home/pages/projects/pages/_source/instanceConditions/index.vue

index 33d17967c1..4388d477aa 100644

--- a/escheduler-ui/src/js/conf/home/pages/projects/pages/_source/instanceConditions/index.vue

+++ b/escheduler-ui/src/js/conf/home/pages/projects/pages/_source/instanceConditions/index.vue

@@ -36,10 +36,10 @@

-

+

-

+

diff --git a/escheduler-ui/src/js/conf/home/pages/projects/pages/definition/pages/list/_source/email.vue b/escheduler-ui/src/js/conf/home/pages/projects/pages/definition/pages/list/_source/email.vue

index cc6cb57646..f5c38f9a12 100644

--- a/escheduler-ui/src/js/conf/home/pages/projects/pages/definition/pages/list/_source/email.vue

+++ b/escheduler-ui/src/js/conf/home/pages/projects/pages/definition/pages/list/_source/email.vue

@@ -32,9 +32,12 @@

v-model="email"

:disabled="disabled"

:placeholder="$t('Please enter email')"

+ @blur="_emailEnter"

@keydown.tab="_emailTab"

@keyup.delete="_emailDelete"

@keyup.enter="_emailEnter"

+ @keyup.space="_emailEnter"

+ @keyup.186="_emailEnter"

@keyup.up="_emailKeyup('up')"

@keyup.down="_emailKeyup('down')">

@@ -78,6 +81,11 @@

* Manually add a mailbox

*/

_manualEmail () {

+ if (this.email === '') {

+ return

+ }

+ this.email = _.trim(this.email).replace(/(;$)|(;$)/g, "")

+

let email = this.email

let is = (n) => {

diff --git a/escheduler-ui/src/js/conf/home/pages/projects/pages/definition/pages/list/_source/list.vue b/escheduler-ui/src/js/conf/home/pages/projects/pages/definition/pages/list/_source/list.vue

index aa2be8ef8e..f9e8dba231 100644

--- a/escheduler-ui/src/js/conf/home/pages/projects/pages/definition/pages/list/_source/list.vue

+++ b/escheduler-ui/src/js/conf/home/pages/projects/pages/definition/pages/list/_source/list.vue

@@ -58,12 +58,12 @@

-

-

-

-

-

-

-

+

+

+

+

+

+

+ :title="$t('delete')">

diff --git a/escheduler-ui/src/js/conf/home/pages/projects/pages/definition/pages/list/_source/start.vue b/escheduler-ui/src/js/conf/home/pages/projects/pages/definition/pages/list/_source/start.vue

index ddb6b0a156..c2e3c33728 100644

--- a/escheduler-ui/src/js/conf/home/pages/projects/pages/definition/pages/list/_source/start.vue

+++ b/escheduler-ui/src/js/conf/home/pages/projects/pages/definition/pages/list/_source/start.vue

@@ -137,7 +137,7 @@

{{$t('Cancel')}}

- {{spinnerLoading ? 'Loading...' : $t('Start')}}

+ {{spinnerLoading ? 'Loading...' : $t('Start')}}

diff --git a/escheduler-ui/src/js/conf/home/pages/projects/pages/definition/pages/list/_source/timing.vue b/escheduler-ui/src/js/conf/home/pages/projects/pages/definition/pages/list/_source/timing.vue

index ba67536ea2..42bb7905a0 100644

--- a/escheduler-ui/src/js/conf/home/pages/projects/pages/definition/pages/list/_source/timing.vue

+++ b/escheduler-ui/src/js/conf/home/pages/projects/pages/definition/pages/list/_source/timing.vue

@@ -21,9 +21,11 @@

+ Execution time

{{$t('Timing')}}

+

@@ -43,6 +45,13 @@

{{$t('Failure Strategy')}}

@@ -127,7 +136,7 @@

{{$t('Cancel')}}

- {{spinnerLoading ? 'Loading...' : (item.crontab ? $t('Edit') : $t('Create'))}}

+ {{spinnerLoading ? 'Loading...' : (item.crontab ? $t('Edit') : $t('Create'))}}

@@ -162,7 +171,8 @@

receiversCc: [],

i18n: i18n.globalScope.LOCALE,

processInstancePriority: 'MEDIUM',

- workerGroupId: -1

+ workerGroupId: -1,

+ previewTimes: []

}

},

props: {

@@ -180,6 +190,11 @@

return false

}

+ if (this.scheduleTime[0] === this.scheduleTime[1]) {

+ this.$message.warning(`${i18n.$t('The start time must not be the same as the end')}`)

+ return false

+ }

+

if (!this.crontab) {

this.$message.warning(`${i18n.$t('Please enter crontab')}`)

return false

@@ -225,6 +240,24 @@

}

},

+ _preview () {

+ if (this._verification()) {

+ let api = 'dag/previewSchedule'

+ let searchParams = {

+ schedule: JSON.stringify({

+ startTime: this.scheduleTime[0],

+ endTime: this.scheduleTime[1],

+ crontab: this.crontab

+ })

+ }

+ let msg = ''

+

+ this.store.dispatch(api, searchParams).then(res => {

+ this.previewTimes = res

+ })

+ }

+ },

+

_getNotifyGroupList () {

return new Promise((resolve, reject) => {

let notifyGroupListS = _.cloneDeep(this.store.state.dag.notifyGroupListS) || []

@@ -248,6 +281,9 @@

},

close () {

this.$emit('close')

+ },

+ preview () {

+ this._preview()

}

},

watch: {
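Reviewer sketch of the preview flow added in this file: the new start/end check followed by the payload that `_preview` dispatches to `dag/previewSchedule`. The helper name `buildPreviewPayload`, the result shape, and the sample values are assumptions for illustration only; the field names `startTime`, `endTime`, and `crontab` are taken from the hunk.

```javascript
// Hypothetical helper mirroring the checks in _verification and the payload in _preview.
function buildPreviewPayload (scheduleTime, crontab) {
  // New check from this change: identical start and end are rejected.
  if (scheduleTime[0] === scheduleTime[1]) {
    return { ok: false, reason: 'The start time must not be the same as the end' }
  }
  // Existing check: a crontab expression is required.
  if (!crontab) {
    return { ok: false, reason: 'Please enter crontab' }
  }
  // The schedule is sent as a JSON string under the `schedule` key.
  return {
    ok: true,
    params: {
      schedule: JSON.stringify({
        startTime: scheduleTime[0],
        endTime: scheduleTime[1],
        crontab: crontab
      })
    }
  }
}

// Assumed sample values, not taken from the project.
const preview = buildPreviewPayload(['2019-06-10 00:00:00', '2019-06-13 00:00:00'], '0 0 3 * * ? *')
const rejected = buildPreviewPayload(['2019-06-10 00:00:00', '2019-06-10 00:00:00'], '0 0 3 * * ? *')
console.log(preview.params.schedule)
console.log(rejected.reason)
```

Keeping the check and the payload in one pure function makes the start/end rule testable without mounting the component.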

diff --git a/escheduler-ui/src/js/conf/home/pages/projects/pages/definition/pages/list/_source/util.js b/escheduler-ui/src/js/conf/home/pages/projects/pages/definition/pages/list/_source/util.js

index db6c8aa261..2259dea9cd 100644

--- a/escheduler-ui/src/js/conf/home/pages/projects/pages/definition/pages/list/_source/util.js

+++ b/escheduler-ui/src/js/conf/home/pages/projects/pages/definition/pages/list/_source/util.js

@@ -37,7 +37,7 @@ let warningTypeList = [

]

const isEmial = (val) => {

- let regEmail = /^([a-zA-Z0-9]+[_|\_|\.]?)*[a-zA-Z0-9]+@([a-zA-Z0-9]+[_|\_|\.]?)*[a-zA-Z0-9]+\.[a-zA-Z]{2,3}$/ // eslint-disable-line

+ let regEmail = /^([a-zA-Z0-9]+[_|\-|\.]?)*[a-zA-Z0-9]+@([a-zA-Z0-9]+[_|\-|\.]?)*[a-zA-Z0-9]+\.[a-zA-Z]{2,3}$/ // eslint-disable-line

return regEmail.test(val)

}
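Reviewer sketch of what the character-class change does. Both patterns are copied from the hunk above; the names `oldRe` and `newRe` and the sample addresses are assumptions for illustration.

```javascript
// Patterns copied verbatim from the removed (-) and added (+) lines.
const oldRe = /^([a-zA-Z0-9]+[_|\_|\.]?)*[a-zA-Z0-9]+@([a-zA-Z0-9]+[_|\_|\.]?)*[a-zA-Z0-9]+\.[a-zA-Z]{2,3}$/ // eslint-disable-line
const newRe = /^([a-zA-Z0-9]+[_|\-|\.]?)*[a-zA-Z0-9]+@([a-zA-Z0-9]+[_|\-|\.]?)*[a-zA-Z0-9]+\.[a-zA-Z]{2,3}$/ // eslint-disable-line

// Hypothetical sample addresses.
const samples = ['foo-bar@example.com', 'foo_bar@example.com', 'user@example.info']

// Evaluate each address against both patterns.
const results = samples.map(address => ({
  address: address,
  oldOk: oldRe.test(address),
  newOk: newRe.test(address)
}))
console.log(results)
```

The old class `[_|\_|\.]` listed the underscore twice and never admitted a hyphen, so only the new pattern accepts the first sample. Note that this file keeps `{2,3}` for the top-level domain while `createUser.vue` in the same change relaxes it to `{2,}`, so the two validators can disagree on addresses such as the third sample.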

diff --git a/escheduler-ui/src/js/conf/home/pages/projects/pages/definition/pages/list/index.vue b/escheduler-ui/src/js/conf/home/pages/projects/pages/definition/pages/list/index.vue

index bc63896c17..bf8612dd98 100644

--- a/escheduler-ui/src/js/conf/home/pages/projects/pages/definition/pages/list/index.vue

+++ b/escheduler-ui/src/js/conf/home/pages/projects/pages/definition/pages/list/index.vue

@@ -3,7 +3,7 @@

- {{$t('Create process')}}

+ {{$t('Create process')}}

diff --git a/escheduler-ui/src/js/conf/home/pages/projects/pages/index/index.vue b/escheduler-ui/src/js/conf/home/pages/projects/pages/index/index.vue

index 28f61f9e65..f695f3a678 100644

--- a/escheduler-ui/src/js/conf/home/pages/projects/pages/index/index.vue

+++ b/escheduler-ui/src/js/conf/home/pages/projects/pages/index/index.vue

@@ -33,30 +33,6 @@

-

-

-

-

-

- {{$t('Queue statistics')}}

-

-

-

-

-

-

-

-

-

- {{$t('Command status statistics')}}

-

-

-

-

-

-

-

diff --git a/escheduler-ui/src/js/conf/home/pages/projects/pages/instance/pages/list/_source/list.vue b/escheduler-ui/src/js/conf/home/pages/projects/pages/instance/pages/list/_source/list.vue

index f98383c558..619407a61a 100644

--- a/escheduler-ui/src/js/conf/home/pages/projects/pages/instance/pages/list/_source/list.vue

+++ b/escheduler-ui/src/js/conf/home/pages/projects/pages/instance/pages/list/_source/list.vue

@@ -73,7 +73,6 @@

data-toggle="tooltip"

:title="$t('Edit')"

@click="_reEdit(item)"

- v-ps="['GENERAL_USER']"

icon="iconfont icon-bianjixiugai"

:disabled="item.state !== 'SUCCESS' && item.state !== 'PAUSE' && item.state !== 'FAILURE' && item.state !== 'STOP'">

+ :title="item.state === 'STOP' ? $t('Recovery Suspend') : $t('Stop')"

+ @click="_stop(item,$index)"

+ :icon="item.state === 'STOP' ? 'iconfont icon-ai06' : 'iconfont icon-zanting'"

+ :disabled="item.state !== 'RUNNING_EXEUTION' && item.state !== 'STOP'">

+ :title="$t('delete')">

@@ -161,7 +155,7 @@

shape="circle"

size="xsmall"

disabled="true">

- {{item.count}}s

+ {{item.count}}

- {{item.count}}s

+ {{item.count}}

-

-

+

+

+

+

+

+

+

- {{item.count}}s

+ {{item.count}}

+

+

+

@@ -368,11 +371,20 @@

* stop

* @param STOP

*/

- _stop (item) {

- this._upExecutorsState({

- processInstanceId: item.id,

- executeType: 'STOP'

- })

+ _stop (item, index) {

+ if (item.state === 'STOP') {

+ this._countDownFn({

+ id: item.id,

+ executeType: 'RECOVER_SUSPENDED_PROCESS',

+ index: index,

+ buttonType: 'suspend'

+ })

+ } else {

+ this._upExecutorsState({

+ processInstanceId: item.id,

+ executeType: 'STOP'

+ })

+ }

},

/**

* pause

@@ -389,7 +401,7 @@

} else {

this._upExecutorsState({

processInstanceId: item.id,

- executeType: item.state === 'PAUSE' ? 'RECOVER_SUSPENDED_PROCESS' : 'PAUSE'

+ executeType: 'PAUSE'

})

}

},

@@ -441,7 +453,7 @@

if (data.length) {

_.map(data, v => {

v.disabled = true

- v.count = 10

+ v.count = 9

})

}

return data
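Reviewer sketch of the stop button's new behavior as two pure functions. The function names and the returned object shape are hypothetical; the state strings and execute types (including the spelling `RUNNING_EXEUTION`) are taken from the hunks above.

```javascript
// Hypothetical: which request the stop button issues for a given instance state.
function stopButtonAction (state) {
  if (state === 'STOP') {
    // A stopped instance is resumed, going through the countdown path.
    return { executeType: 'RECOVER_SUSPENDED_PROCESS', countdown: true }
  }
  // Any other state is stopped directly.
  return { executeType: 'STOP', countdown: false }
}

// Hypothetical: mirrors the :disabled binding on the same button.
function stopButtonDisabled (state) {
  return state !== 'RUNNING_EXEUTION' && state !== 'STOP'
}

console.log(stopButtonAction('STOP'), stopButtonDisabled('STOP'))
console.log(stopButtonAction('RUNNING_EXEUTION'), stopButtonDisabled('RUNNING_EXEUTION'))
console.log(stopButtonDisabled('SUCCESS'))
```

After this change the pause handler always sends `PAUSE` in its fallback branch, so resuming is reachable from the stop button and from the countdown branches only.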

diff --git a/escheduler-ui/src/js/conf/home/pages/projects/pages/list/_source/list.vue b/escheduler-ui/src/js/conf/home/pages/projects/pages/list/_source/list.vue

index 087b50032a..21a58fc1ea 100644

--- a/escheduler-ui/src/js/conf/home/pages/projects/pages/list/_source/list.vue

+++ b/escheduler-ui/src/js/conf/home/pages/projects/pages/list/_source/list.vue

@@ -63,8 +63,7 @@

data-toggle="tooltip"

:title="$t('Edit')"

@click="_edit(item)"

- icon="iconfont icon-bianjixiugai"

- v-ps="['GENERAL_USER']">

+ icon="iconfont icon-bianjixiugai">

+ icon="iconfont icon-shanchu">

diff --git a/escheduler-ui/src/js/conf/home/pages/projects/pages/list/index.vue b/escheduler-ui/src/js/conf/home/pages/projects/pages/list/index.vue

index 6031b590e0..7b2f555192 100644

--- a/escheduler-ui/src/js/conf/home/pages/projects/pages/list/index.vue

+++ b/escheduler-ui/src/js/conf/home/pages/projects/pages/list/index.vue

@@ -3,7 +3,7 @@

- {{$t('Create Project')}}

+ {{$t('Create Project')}}

@@ -113,4 +113,4 @@

},

components: { mListConstruction, mSpin, mConditions, mList, mCreateProject, mNoData }

}

-

\ No newline at end of file

+

diff --git a/escheduler-ui/src/js/conf/home/pages/resource/pages/file/pages/create/index.vue b/escheduler-ui/src/js/conf/home/pages/resource/pages/file/pages/create/index.vue

index d08ed7f8dd..bf3ebe044d 100644

--- a/escheduler-ui/src/js/conf/home/pages/resource/pages/file/pages/create/index.vue

+++ b/escheduler-ui/src/js/conf/home/pages/resource/pages/file/pages/create/index.vue

@@ -49,7 +49,7 @@

- {{spinnerLoading ? 'Loading...' : $t('Create')}}

+ {{spinnerLoading ? 'Loading...' : $t('Create')}}

{{$t('Cancel')}}

diff --git a/escheduler-ui/src/js/conf/home/pages/resource/pages/file/pages/list/_source/list.vue b/escheduler-ui/src/js/conf/home/pages/resource/pages/file/pages/list/_source/list.vue

index 40c03ff7a0..89acc74dd6 100644

--- a/escheduler-ui/src/js/conf/home/pages/resource/pages/file/pages/list/_source/list.vue

+++ b/escheduler-ui/src/js/conf/home/pages/resource/pages/file/pages/list/_source/list.vue

@@ -51,8 +51,7 @@

:title="$t('Edit')"

:disabled="_rtDisb(item)"

@click="_edit(item,$index)"

- icon="iconfont icon-bianjixiugai"

- v-ps="['GENERAL_USER']">

+ icon="iconfont icon-bianjixiugai">

+ @click="_rename(item,$index)">

+ icon="iconfont icon-download">

+ :title="$t('delete')">

@@ -210,4 +206,4 @@

},

components: { }

}

-

\ No newline at end of file

+

diff --git a/escheduler-ui/src/js/conf/home/pages/resource/pages/file/pages/list/index.vue b/escheduler-ui/src/js/conf/home/pages/resource/pages/file/pages/list/index.vue

index 6555d7a6d4..7434772899 100644

--- a/escheduler-ui/src/js/conf/home/pages/resource/pages/file/pages/list/index.vue

+++ b/escheduler-ui/src/js/conf/home/pages/resource/pages/file/pages/list/index.vue

@@ -4,8 +4,8 @@

- {{$t('Create File')}}

- {{$t('Upload Files')}}

+ {{$t('Create File')}}

+ {{$t('Upload Files')}}

@@ -98,4 +98,4 @@

},

components: { mListConstruction, mConditions, mList, mSpin, mNoData }

}

-

\ No newline at end of file

+

diff --git a/escheduler-ui/src/js/conf/home/pages/resource/pages/udf/pages/function/_source/list.vue b/escheduler-ui/src/js/conf/home/pages/resource/pages/udf/pages/function/_source/list.vue

index 8d96ede359..ad0c510c87 100644

--- a/escheduler-ui/src/js/conf/home/pages/resource/pages/udf/pages/function/_source/list.vue

+++ b/escheduler-ui/src/js/conf/home/pages/resource/pages/udf/pages/function/_source/list.vue

@@ -1,4 +1,4 @@

-

+v-ps

@@ -70,8 +70,7 @@

data-toggle="tooltip"

:title="$t('Edit')"

@click="_edit(item)"

- icon="iconfont icon-bianjixiugai"

- v-ps="['GENERAL_USER']">

+ icon="iconfont icon-bianjixiugai">

+ :title="$t('delete')">

diff --git a/escheduler-ui/src/js/conf/home/pages/resource/pages/udf/pages/function/index.vue b/escheduler-ui/src/js/conf/home/pages/resource/pages/udf/pages/function/index.vue

index 84a79be603..9ce2373292 100644

--- a/escheduler-ui/src/js/conf/home/pages/resource/pages/udf/pages/function/index.vue

+++ b/escheduler-ui/src/js/conf/home/pages/resource/pages/udf/pages/function/index.vue

@@ -3,7 +3,7 @@

- {{$t('Create UDF Function')}}

+ {{$t('Create UDF Function')}}

diff --git a/escheduler-ui/src/js/conf/home/pages/resource/pages/udf/pages/resource/_source/list.vue b/escheduler-ui/src/js/conf/home/pages/resource/pages/udf/pages/resource/_source/list.vue

index 672f8b5226..4077f13bc0 100644

--- a/escheduler-ui/src/js/conf/home/pages/resource/pages/udf/pages/resource/_source/list.vue

+++ b/escheduler-ui/src/js/conf/home/pages/resource/pages/udf/pages/resource/_source/list.vue

@@ -58,8 +58,7 @@

icon="iconfont icon-wendangxiugai"

data-toggle="tooltip"

:title="$t('Rename')"

- @click="_rename(item,$index)"

- v-ps="['GENERAL_USER']">

+ @click="_rename(item,$index)">

+ @click="_downloadFile(item)">

+ icon="iconfont icon-shanchu">

diff --git a/escheduler-ui/src/js/conf/home/pages/resource/pages/udf/pages/resource/index.vue b/escheduler-ui/src/js/conf/home/pages/resource/pages/udf/pages/resource/index.vue

index ed9a81a705..228501dbed 100644

--- a/escheduler-ui/src/js/conf/home/pages/resource/pages/udf/pages/resource/index.vue

+++ b/escheduler-ui/src/js/conf/home/pages/resource/pages/udf/pages/resource/index.vue

@@ -3,7 +3,7 @@

- {{$t('Upload UDF Resources')}}

+ {{$t('Upload UDF Resources')}}

diff --git a/escheduler-ui/src/js/conf/home/pages/security/pages/users/_source/createUser.vue b/escheduler-ui/src/js/conf/home/pages/security/pages/users/_source/createUser.vue

index 9d3ab042d8..378f410d38 100644

--- a/escheduler-ui/src/js/conf/home/pages/security/pages/users/_source/createUser.vue

+++ b/escheduler-ui/src/js/conf/home/pages/security/pages/users/_source/createUser.vue

@@ -98,7 +98,9 @@

userName: '',

userPassword: '',

tenantId: {},

- queueName: {},

+ queueName: {

+ id: ''

+ },

email: '',

phone: '',

tenantList: [],

@@ -129,7 +131,8 @@

}

},

_verification () {

- let regEmail = /^([a-zA-Z0-9]+[_|\_|\.]?)*[a-zA-Z0-9]+@([a-zA-Z0-9]+[_|\_|\.]?)*[a-zA-Z0-9]+\.[a-zA-Z]{2,3}$/ // eslint-disable-line

+ let regEmail = /^([a-zA-Z0-9]+[_|\-|\.]?)*[a-zA-Z0-9]+@([a-zA-Z0-9]+[_|\-|\.]?)*[a-zA-Z0-9]+\.[a-zA-Z]{2,}$/ // eslint-disable-line

+

// Mobile phone number regular

let regPhone = /^1(3|4|5|6|7|8)\d{9}$/; // eslint-disable-line

@@ -182,7 +185,10 @@

_getTenantList () {

return new Promise((resolve, reject) => {

this.store.dispatch('security/getTenantList').then(res => {

- this.tenantList = _.map(res, v => {

+ let arr = _.filter(res, (o) => {

+ return o.id !== -1

+ })

+ this.tenantList = _.map(arr, v => {

return {

id: v.id,

code: v.tenantName

@@ -197,6 +203,7 @@

},

_submit () {

this.$refs['popup'].spinnerLoading = true

+ console.log(this.tenantId.id)

let param = {

userName: this.userName,

userPassword: this.userPassword,

@@ -205,9 +212,11 @@

queue: this.queueName.code,

phone: this.phone

}

+

if (this.item) {

param.id = this.item.id

}

+

this.store.dispatch(`security/${this.item ? 'updateUser' : 'createUser'}`, param).then(res => {

setTimeout(() => {

this.$refs['popup'].spinnerLoading = false

@@ -232,7 +241,7 @@

this.phone = this.item.phone

this.tenantId = _.find(this.tenantList, ['id', this.item.tenantId])

this.$nextTick(() => {

- this.queueName = _.find(this.queueList, ['code', this.item.queue])

+ this.queueName = _.find(this.queueList, ['code', this.item.queue]) || { id: '' }

})

}

})

@@ -243,7 +252,7 @@

this.email = this.item.email

this.phone = this.item.phone

this.tenantId.id = this.item.tenantId

- this.queueName = { queue: this.item.queue }

+ this.queueName = { queue: this.item.queue}

}

}

},

diff --git a/escheduler-ui/src/js/conf/home/pages/security/pages/users/_source/list.vue b/escheduler-ui/src/js/conf/home/pages/security/pages/users/_source/list.vue

index 125a3dfd93..e97886a61b 100644

--- a/escheduler-ui/src/js/conf/home/pages/security/pages/users/_source/list.vue

+++ b/escheduler-ui/src/js/conf/home/pages/security/pages/users/_source/list.vue

@@ -9,6 +9,9 @@

|

{{$t('User Name')}}

|

+

+ User type

+ |

{{$t('Tenant')}}

|

@@ -21,6 +24,7 @@

{{$t('Phone')}}

|

+

{{$t('Create Time')}}

|

@@ -40,6 +44,9 @@

{{item.userName || '-'}}

+

+ {{item.userType === 'GENERAL_USER' ? `${$t('Ordinary users')}` : `${$t('Administrator')}`}}

+ |

{{item.tenantName || '-'}} |

{{item.queue || '-'}} |

@@ -62,7 +69,7 @@

{{$t('UDF Function')}}

-

+

@@ -84,6 +91,7 @@

size="xsmall"

data-toggle="tooltip"

:title="$t('delete')"

+ :disabled="item.userType === 'ADMIN_USER'"

icon="iconfont icon-shanchu">

diff --git a/escheduler-ui/src/js/conf/home/router/index.js b/escheduler-ui/src/js/conf/home/router/index.js

index 97a7e81a10..c1aa86d6ec 100644

--- a/escheduler-ui/src/js/conf/home/router/index.js

+++ b/escheduler-ui/src/js/conf/home/router/index.js

@@ -439,6 +439,14 @@ const router = new Router({

meta: {

title: `Mysql`

}

+ },

+ {

+ path: '/monitor/servers/statistics',

+ name: 'statistics',

+ component: resolve => require(['../pages/monitor/pages/servers/statistics'], resolve),

+ meta: {

+ title: `statistics`

+ }

}

]

}

diff --git a/escheduler-ui/src/js/conf/home/store/dag/actions.js b/escheduler-ui/src/js/conf/home/store/dag/actions.js

index e41e4be760..c93505eead 100644

--- a/escheduler-ui/src/js/conf/home/store/dag/actions.js

+++ b/escheduler-ui/src/js/conf/home/store/dag/actions.js

@@ -115,6 +115,7 @@ export default {

// timeout

state.timeout = processDefinitionJson.timeout

+ state.tenantId = processDefinitionJson.tenantId

resolve(res.data)

}).catch(res => {

reject(res)

@@ -146,6 +147,12 @@ export default {

// timeout

state.timeout = processInstanceJson.timeout

+ state.tenantId = processInstanceJson.tenantId

+

+ //startup parameters

+ state.startup = _.assign(state.startup, _.pick(res.data, ['commandType', 'failureStrategy', 'processInstancePriority', 'workerGroupId', 'warningType', 'warningGroupId', 'receivers', 'receiversCc']))

+ state.startup.commandParam = JSON.parse(res.data.commandParam)

+

resolve(res.data)

}).catch(res => {

reject(res)

@@ -160,6 +167,7 @@ export default {

let data = {

globalParams: state.globalParams,

tasks: state.tasks,

+ tenantId: state.tenantId,

timeout: state.timeout

}

io.post(`projects/${state.projectName}/process/save`, {

@@ -183,6 +191,7 @@ export default {

let data = {

globalParams: state.globalParams,

tasks: state.tasks,

+ tenantId: state.tenantId,

timeout: state.timeout

}

io.post(`projects/${state.projectName}/process/update`, {

@@ -207,6 +216,7 @@ export default {

let data = {

globalParams: state.globalParams,

tasks: state.tasks,

+ tenantId: state.tenantId,

timeout: state.timeout

}

io.post(`projects/${state.projectName}/instance/update`, {

@@ -377,6 +387,19 @@ export default {

})

})

},

+ /**

+ * Preview timing

+ */

+ previewSchedule ({ state }, payload) {

+ return new Promise((resolve, reject) => {

+ io.post(`projects/${state.projectName}/schedule/preview`, payload, res => {

+ resolve(res.data)

+ //alert(res.data)

+ }).catch(e => {

+ reject(e)

+ })

+ })

+ },

/**

* Timing list paging

*/
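Reviewer sketch of the startup-parameter handling added to the instance loader, written without lodash so it runs standalone. The key list is copied from the hunk; `pick`, `parseCommandParam`, `buildStartup`, and the empty-object fallback are assumptions. The hunk calls `JSON.parse(res.data.commandParam)` directly, which throws a `SyntaxError` when the value is `undefined` or an empty string; whether the backend ever returns such a value is not shown in this diff.

```javascript
// Keys copied from the _.pick call in the hunk.
const STARTUP_KEYS = ['commandType', 'failureStrategy', 'processInstancePriority',
  'workerGroupId', 'warningType', 'warningGroupId', 'receivers', 'receiversCc']

// Minimal stand-in for _.pick: copy only the listed keys that exist on the object.
function pick (obj, keys) {
  const out = {}
  keys.forEach(key => {
    if (key in obj) out[key] = obj[key]
  })
  return out
}

// Assumption: fall back to an empty object when the field is absent, null, or malformed.
function parseCommandParam (raw) {
  try {
    return JSON.parse(raw) || {}
  } catch (e) {
    return {}
  }
}

// Hypothetical equivalent of the two added state.startup lines, without mutating the input.
function buildStartup (startup, data) {
  const next = Object.assign({}, startup, pick(data, STARTUP_KEYS))
  next.commandParam = parseCommandParam(data.commandParam)
  return next
}

const full = buildStartup({}, { commandType: 'START_PROCESS', commandParam: '{"a":1}', extra: 1 })
const empty = buildStartup({}, {})
console.log(full, empty)
```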

diff --git a/escheduler-ui/src/js/conf/home/store/dag/mutations.js b/escheduler-ui/src/js/conf/home/store/dag/mutations.js

index 15f87fab30..d3386bc76a 100644

--- a/escheduler-ui/src/js/conf/home/store/dag/mutations.js

+++ b/escheduler-ui/src/js/conf/home/store/dag/mutations.js

@@ -58,6 +58,12 @@ export default {

setTimeout (state, payload) {

state.timeout = payload

},

+ /**

+ * set tenantId

+ */

+ setTenantId (state, payload) {

+ state.tenantId = payload

+ },

/**

* set global params

*/

@@ -100,6 +106,7 @@ export default {

state.name = payload && payload.name || ''

state.desc = payload && payload.desc || ''

state.timeout = payload && payload.timeout || 0

+ state.tenantId = payload && payload.tenantId || -1

state.processListS = payload && payload.processListS || []

state.resourcesListS = payload && payload.resourcesListS || []

state.isDetails = payload && payload.isDetails || false
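Reviewer sketch of how the `payload && payload.tenantId || -1` reset expression behaves. The function name is hypothetical; the expression is copied from the added line. Any falsy `tenantId`, including `0`, falls back to `-1`; whether `0` is ever a valid tenant id is not established by this diff.

```javascript
// Hypothetical wrapper around the expression used in the reset mutation.
function resetTenantId (payload) {
  return payload && payload.tenantId || -1
}

console.log(resetTenantId(undefined))       // -1: no payload
console.log(resetTenantId({ tenantId: 5 })) // 5: value kept
console.log(resetTenantId({ tenantId: 0 })) // -1: 0 is falsy, so the default wins
```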

diff --git a/escheduler-ui/src/js/conf/home/store/dag/state.js b/escheduler-ui/src/js/conf/home/store/dag/state.js

index 9679893e60..c875500f5f 100644

--- a/escheduler-ui/src/js/conf/home/store/dag/state.js

+++ b/escheduler-ui/src/js/conf/home/store/dag/state.js

@@ -31,6 +31,8 @@ export default {

tasks: [],

// Timeout alarm

timeout: 0,

+ // tenant id

+ tenantId: -1,

// Node location information

locations: {},

// Node-to-node connection

@@ -90,5 +92,8 @@ export default {

// Process instance list{ view a single record }

instanceListS: [],

// Operating state

- isDetails: false

+ isDetails: false,

+ startup: {

+

+ }

}

diff --git a/escheduler-ui/src/js/conf/home/store/datasource/actions.js b/escheduler-ui/src/js/conf/home/store/datasource/actions.js

index 3e409ccb8b..c54a37f706 100644

--- a/escheduler-ui/src/js/conf/home/store/datasource/actions.js

+++ b/escheduler-ui/src/js/conf/home/store/datasource/actions.js

@@ -116,5 +116,14 @@ export default {

reject(e)

})

})

+ },

+ getKerberosStartupState ({ state }, payload) {

+ return new Promise((resolve, reject) => {

+ io.get(`datasources/kerberos-startup-state`, payload, res => {

+ resolve(res.data)

+ }).catch(e => {

+ reject(e)

+ })

+ })

}

}

diff --git a/escheduler-ui/src/js/conf/home/store/security/actions.js b/escheduler-ui/src/js/conf/home/store/security/actions.js

index 9fda7663ab..ff96adccf9 100644

--- a/escheduler-ui/src/js/conf/home/store/security/actions.js

+++ b/escheduler-ui/src/js/conf/home/store/security/actions.js

@@ -240,7 +240,13 @@ export default {

getTenantList ({ state }, payload) {

return new Promise((resolve, reject) => {

io.get(`tenant/list`, payload, res => {

- resolve(res.data)

+ let list = res.data

+ list.unshift({

+ id: -1,

+ tenantName: 'Default'

+ })

+ state.tenantAllList = list

+ resolve(list)

}).catch(e => {

reject(e)

})
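Reviewer sketch of how the synthetic `Default` tenant added here interacts with the filter added in `createUser.vue`. The sample response and both function names are assumptions; the `-1` id, the `Default` name, and the `{ id, code }` mapping come from the hunks. Unlike the hunk, which calls `unshift` on `res.data` in place, this version copies the array first.

```javascript
// Hypothetical stand-in for the `tenant/list` response body.
const responseData = [{ id: 1, tenantName: 'etl' }, { id: 2, tenantName: 'ops' }]

// As in getTenantList: prepend the synthetic "Default" tenant.
function withDefaultTenant (data) {
  const list = data.slice() // copy so the response array itself is left untouched
  list.unshift({ id: -1, tenantName: 'Default' })
  return list
}

// As in createUser.vue: drop the synthetic entry, then map to { id, code }.
function selectableTenants (list) {
  return list
    .filter(o => o.id !== -1)
    .map(v => ({ id: v.id, code: v.tenantName }))
}

const all = withDefaultTenant(responseData)
const selectable = selectableTenants(all)
console.log(all, selectable)
```

Every consumer of `getTenantList` that should not offer `Default` has to apply the same filter, since the action now always returns the extra entry.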

diff --git a/escheduler-ui/src/js/conf/home/store/security/state.js b/escheduler-ui/src/js/conf/home/store/security/state.js

index be52d7838c..cbb67a1823 100644

--- a/escheduler-ui/src/js/conf/home/store/security/state.js

+++ b/escheduler-ui/src/js/conf/home/store/security/state.js

@@ -15,5 +15,6 @@

* limitations under the License.

*/

export default {

- workerGroupsListAll: []

+ workerGroupsListAll: [],

+ tenantAllList: []

}

diff --git a/escheduler-ui/src/js/module/components/secondaryMenu/_source/menu.js b/escheduler-ui/src/js/module/components/secondaryMenu/_source/menu.js

index 20182ce0b1..1c0aefbec7 100644

--- a/escheduler-ui/src/js/module/components/secondaryMenu/_source/menu.js

+++ b/escheduler-ui/src/js/module/components/secondaryMenu/_source/menu.js

@@ -193,7 +193,7 @@ let menu = {

monitor: [

{

name: `${i18n.$t('Servers manage')}`,

- id: 0,

+ id: 1,

path: '',

isOpen: true,

disabled: true,

@@ -242,6 +242,22 @@ let menu = {

disabled: true

}

]

+ },

+ {

+ name: `${i18n.$t('Statistics manage')}`,

+ id: 0,

+ path: '',

+ isOpen: true,

+ disabled: true,

+ icon: 'fa-server',

+ children: [

+ {

+ name: "Statistics",

+ path: 'statistics',

+ id: 0,

+ disabled: true

+ }

+ ]

}

]

}

diff --git a/escheduler-ui/src/js/module/i18n/locale/en_US.js b/escheduler-ui/src/js/module/i18n/locale/en_US.js

index 50c33c061b..79d66cf147 100644

--- a/escheduler-ui/src/js/module/i18n/locale/en_US.js

+++ b/escheduler-ui/src/js/module/i18n/locale/en_US.js

@@ -395,6 +395,7 @@ export default {

'Last2Days': 'Last2Days',

'Last3Days': 'Last3Days',

'Last7Days': 'Last7Days',

+ 'ThisWeek': 'ThisWeek',

'LastWeek': 'LastWeek',

'LastMonday': 'LastMonday',

'LastTuesday': 'LastTuesday',

@@ -403,6 +404,7 @@ export default {

'LastFriday': 'LastFriday',

'LastSaturday': 'LastSaturday',

'LastSunday': 'LastSunday',

+ 'ThisMonth': 'ThisMonth',

'LastMonth': 'LastMonth',

'LastMonthBegin': 'LastMonthBegin',

'LastMonthEnd': 'LastMonthEnd',

@@ -456,5 +458,18 @@ export default {

'Post Statement': 'Post Statement',

'Statement cannot be empty': 'Statement cannot be empty',

'Process Define Count': 'Process Define Count',

- 'Process Instance Running Count': 'Process Instance Running Count'

+ 'Process Instance Running Count': 'Process Instance Running Count',

+ 'process number of waiting for running': 'process number of waiting for running',

+ 'failure command number': 'failure command number',

+ 'tasks number of waiting running': 'tasks number of waiting running',

+ 'task number of ready to kill': 'task number of ready to kill',

+ 'Statistics manage': 'Statistics manage',

+ 'statistics': 'statistics',

+ 'select tenant':'select tenant',

+ 'Please enter Principal':'Please enter Principal',

+ 'The start time must not be the same as the end': 'The start time must not be the same as the end',

+ 'Startup parameter': 'Startup parameter',

+ 'Startup type': 'Startup type',

+ 'warning of timeout': 'warning of timeout',

+ 'Complement range': 'Complement range'

}

diff --git a/escheduler-ui/src/js/module/i18n/locale/zh_CN.js b/escheduler-ui/src/js/module/i18n/locale/zh_CN.js

index d8ea823048..93d145bc8b 100644

--- a/escheduler-ui/src/js/module/i18n/locale/zh_CN.js

+++ b/escheduler-ui/src/js/module/i18n/locale/zh_CN.js

@@ -237,7 +237,7 @@ export default {

'Recovery Failed': '恢复失败',

'Stop': '停止',

'Pause': '暂停',

- 'Recovery Suspend': '恢复暂停',

+ 'Recovery Suspend': '恢复运行',

'Gantt': '甘特图',

'Name': '名称',

'Node Type': '节点类型',

@@ -282,7 +282,7 @@ export default {

'Start Process': '启动工作流',

'Execute from the current node': '从当前节点开始执行',

'Recover tolerance fault process': '恢复被容错的工作流',

- 'Resume the suspension process': '恢复暂停流程',

+ 'Resume the suspension process': '恢复运行流程',

'Execute from the failed nodes': '从失败节点开始执行',

'Complement Data': '补数',

'Scheduling execution': '调度执行',

@@ -395,6 +395,7 @@ export default {

'Last2Days': '前两天',

'Last3Days': '前三天',

'Last7Days': '前七天',

+ 'ThisWeek': '本周',

'LastWeek': '上周',

'LastMonday': '上周一',

'LastTuesday': '上周二',

@@ -403,6 +404,7 @@ export default {

'LastFriday': '上周五',

'LastSaturday': '上周六',

'LastSunday': '上周日',

+ 'ThisMonth': '本月',

'LastMonth': '上月',

'LastMonthBegin': '上月初',

'LastMonthEnd': '上月末',

@@ -458,4 +460,17 @@ export default {

'Process Define Count': '流程定义个数',

'Process Instance Running Count': '运行流程实例个数',

'Please select a queue': '请选择队列',

+ 'process number of waiting for running': '待执行的流程数',

+ 'failure command number': '执行失败的命令数',

+ 'tasks number of waiting running': '待运行任务数',

+ 'task number of ready to kill': '待杀死任务数',

+ 'Statistics manage': '统计管理',

+ 'statistics': '统计',

+ 'select tenant':'选择租户',

+ 'Please enter Principal':'请输入Principal',

+ 'The start time must not be the same as the end': '开始时间和结束时间不能相同',

+ 'Startup parameter': '启动参数',

+ 'Startup type': '启动类型',

+ 'warning of timeout': '超时告警',

+ 'Complement range': '补数范围'

}

diff --git a/escheduler-ui/src/js/module/mixin/disabledState.js b/escheduler-ui/src/js/module/mixin/disabledState.js

index 7c0b1f8e92..4b814a1908 100644

--- a/escheduler-ui/src/js/module/mixin/disabledState.js

+++ b/escheduler-ui/src/js/module/mixin/disabledState.js

@@ -28,11 +28,11 @@ export default {

}

},

created () {

- this.isDetails = Permissions.getAuth() ? this.store.state.dag.isDetails : true

+ this.isDetails =this.store.state.dag.isDetails// Permissions.getAuth() ? this.store.state.dag.isDetails : true

},

computed: {

_isDetails () {

- return this.isDetails ? 'icon-disabled' : ''

+ return ''// this.isDetails ? 'icon-disabled' : ''

}

}

}

diff --git a/escheduler-ui/src/view/common/meta.inc b/escheduler-ui/src/view/common/meta.inc

index 62cdea7f8b..fc307dd487 100644

--- a/escheduler-ui/src/view/common/meta.inc

+++ b/escheduler-ui/src/view/common/meta.inc

@@ -21,4 +21,4 @@

\ No newline at end of file

+

diff --git a/install.sh b/install.sh

index 6fd9e83de2..57cccdf22a 100644

--- a/install.sh

+++ b/install.sh

@@ -106,28 +106,47 @@ sslEnable="true"

# Excel download path

xlsFilePath="/tmp/xls"

+# Enterprise WeChat corp ID

+enterpriseWechatCorpId="xxxxxxxxxx"

+

+# Enterprise WeChat application Secret

+enterpriseWechatSecret="xxxxxxxxxx"

+

+# Enterprise WeChat application AgentId

+enterpriseWechatAgentId="xxxxxxxxxx"

+

+# Enterprise WeChat users; separate multiple users with commas

+enterpriseWechatUsers="xxxxx,xxxxx"

+

# Whether to start the monitoring self-start script

monitorServerState="false"

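-# hadoop configuration

-# Whether to enable HDFS. If true, the hadoop parameters below must be configured;

-# set to false to disable, in which case the settings below need no changes

-# Note: if HDFS is enabled, you must create the HDFS root path yourself, i.e. hdfsPath in install.sh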

-hdfsStartupSate="false"

+# Storage backend for resource center uploads: HDFS, S3, NONE

+resUploadStartupType="NONE"

+

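+# If resUploadStartupType is HDFS, set defaultFS to the namenode address. HA is supported; it requires core-site.xml and hdfs-site.xml in the conf directory

+# If S3, set the S3 address, e.g. s3a://escheduler. Note: the root directory /escheduler must be created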

+defaultFS="hdfs://mycluster:8020"

-# namenode address. HA is supported; it requires core-site.xml and hdfs-site.xml in the conf directory

-namenodeFs="hdfs://mycluster:8020"

+# The following settings are required when S3 is configured

+s3Endpoint="http://192.168.199.91:9010"

+s3AccessKey="A3DXS30FO22544RE"

+s3SecretKey="OloCLq3n+8+sdPHUhJ21XrSxTC+JK"

-# resourcemanager HA configuration; leave empty for a single resourcemanager

+# resourcemanager HA configuration; for a single resourcemanager, set yarnHaIps=""

yarnHaIps="192.168.xx.xx,192.168.xx.xx"

# For a single resourcemanager, configure just one hostname; for resourcemanager HA, the default is fine

singleYarnIp="ark1"

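-# HDFS root path; the owner of the root path must be the deployment user

+# HDFS root path; the owner of the root path must be the deployment user. Versions before 1.1.0 do not create the HDFS root directory automatically, so create it yourself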

hdfsPath="/escheduler"

+# User with permission to create directories under the HDFS root path /

+# Note: if kerberos is enabled, simply set hdfsRootUser=""

+hdfsRootUser="hdfs"

+

# common configuration

# program path

programPath="/tmp/escheduler"

@@ -147,6 +166,19 @@ resSuffixs="txt,log,sh,conf,cfg,py,java,sql,hql,xml"

# Development state: if true, the wrapped SHELL script can be inspected in the execPath directory; if false, it is deleted as soon as execution finishes

devState="true"

+# kerberos configuration

+# whether kerberos is enabled

+kerberosStartUp="false"

+

+# path to the kdc krb5 configuration file

+krb5ConfPath="$installPath/conf/krb5.conf"

+

+# keytab username

+keytabUserName="hdfs-mycluster@ESZ.COM"

+

+# path to the user's keytab

+keytabPath="$installPath/conf/hdfs.headless.keytab"

+

# zk configuration

# zk root directory

zkRoot="/escheduler"

@@ -170,7 +202,7 @@ workersLock="/escheduler/lock/workers"

mastersFailover="/escheduler/lock/failover/masters"

# zk worker failover distributed lock

-workersFailover="/escheduler/lock/failover/masters"

+workersFailover="/escheduler/lock/failover/workers"

# zk master startup failover distributed lock

mastersStartupFailover="/escheduler/lock/failover/startup-masters"

@@ -257,18 +289,27 @@ sed -i ${txt} "s#org.quartz.dataSource.myDs.user.*#org.quartz.dataSource.myDs.us

sed -i ${txt} "s#org.quartz.dataSource.myDs.password.*#org.quartz.dataSource.myDs.password=${mysqlPassword}#g" conf/quartz.properties

-sed -i ${txt} "s#fs.defaultFS.*#fs.defaultFS=${namenodeFs}#g" conf/common/hadoop/hadoop.properties

+sed -i ${txt} "s#fs.defaultFS.*#fs.defaultFS=${defaultFS}#g" conf/common/hadoop/hadoop.properties

+sed -i ${txt} "s#fs.s3a.endpoint.*#fs.s3a.endpoint=${s3Endpoint}#g" conf/common/hadoop/hadoop.properties

+sed -i ${txt} "s#fs.s3a.access.key.*#fs.s3a.access.key=${s3AccessKey}#g" conf/common/hadoop/hadoop.properties

+sed -i ${txt} "s#fs.s3a.secret.key.*#fs.s3a.secret.key=${s3SecretKey}#g" conf/common/hadoop/hadoop.properties

sed -i ${txt} "s#yarn.resourcemanager.ha.rm.ids.*#yarn.resourcemanager.ha.rm.ids=${yarnHaIps}#g" conf/common/hadoop/hadoop.properties

sed -i ${txt} "s#yarn.application.status.address.*#yarn.application.status.address=http://${singleYarnIp}:8088/ws/v1/cluster/apps/%s#g" conf/common/hadoop/hadoop.properties

+

sed -i ${txt} "s#data.basedir.path.*#data.basedir.path=${programPath}#g" conf/common/common.properties

sed -i ${txt} "s#data.download.basedir.path.*#data.download.basedir.path=${downloadPath}#g" conf/common/common.properties

sed -i ${txt} "s#process.exec.basepath.*#process.exec.basepath=${execPath}#g" conf/common/common.properties

+sed -i ${txt} "s#hdfs.root.user.*#hdfs.root.user=${hdfsRootUser}#g" conf/common/common.properties

sed -i ${txt} "s#data.store2hdfs.basepath.*#data.store2hdfs.basepath=${hdfsPath}#g" conf/common/common.properties

-sed -i ${txt} "s#hdfs.startup.state.*#hdfs.startup.state=${hdfsStartupSate}#g" conf/common/common.properties

+sed -i ${txt} "s#res.upload.startup.type.*#res.upload.startup.type=${resUploadStartupType}#g" conf/common/common.properties

sed -i ${txt} "s#escheduler.env.path.*#escheduler.env.path=${shellEnvPath}#g" conf/common/common.properties

sed -i ${txt} "s#resource.view.suffixs.*#resource.view.suffixs=${resSuffixs}#g" conf/common/common.properties

sed -i ${txt} "s#development.state.*#development.state=${devState}#g" conf/common/common.properties

+sed -i ${txt} "s#hadoop.security.authentication.startup.state.*#hadoop.security.authentication.startup.state=${kerberosStartUp}#g" conf/common/common.properties

+sed -i ${txt} "s#java.security.krb5.conf.path.*#java.security.krb5.conf.path=${krb5ConfPath}#g" conf/common/common.properties

+sed -i ${txt} "s#login.user.keytab.username.*#login.user.keytab.username=${keytabUserName}#g" conf/common/common.properties

+sed -i ${txt} "s#login.user.keytab.path.*#login.user.keytab.path=${keytabPath}#g" conf/common/common.properties

sed -i ${txt} "s#zookeeper.quorum.*#zookeeper.quorum=${zkQuorum}#g" conf/zookeeper.properties

sed -i ${txt} "s#zookeeper.escheduler.root.*#zookeeper.escheduler.root=${zkRoot}#g" conf/zookeeper.properties

@@ -290,7 +331,7 @@ sed -i ${txt} "s#master.exec.task.number.*#master.exec.task.number=${masterExecT

sed -i ${txt} "s#master.heartbeat.interval.*#master.heartbeat.interval=${masterHeartbeatInterval}#g" conf/master.properties

sed -i ${txt} "s#master.task.commit.retryTimes.*#master.task.commit.retryTimes=${masterTaskCommitRetryTimes}#g" conf/master.properties

sed -i ${txt} "s#master.task.commit.interval.*#master.task.commit.interval=${masterTaskCommitInterval}#g" conf/master.properties

-sed -i ${txt} "s#master.max.cpuload.avg.*#master.max.cpuload.avg=${masterMaxCpuLoadAvg}#g" conf/master.properties

+#sed -i ${txt} "s#master.max.cpuload.avg.*#master.max.cpuload.avg=${masterMaxCpuLoadAvg}#g" conf/master.properties

sed -i ${txt} "s#master.reserved.memory.*#master.reserved.memory=${masterReservedMemory}#g" conf/master.properties

@@ -317,6 +358,10 @@ sed -i ${txt} "s#mail.passwd.*#mail.passwd=${mailPassword}#g" conf/alert.propert

sed -i ${txt} "s#mail.smtp.starttls.enable.*#mail.smtp.starttls.enable=${starttlsEnable}#g" conf/alert.properties

sed -i ${txt} "s#mail.smtp.ssl.enable.*#mail.smtp.ssl.enable=${sslEnable}#g" conf/alert.properties

sed -i ${txt} "s#xls.file.path.*#xls.file.path=${xlsFilePath}#g" conf/alert.properties

+sed -i ${txt} "s#enterprise.wechat.corp.id.*#enterprise.wechat.corp.id=${enterpriseWechatCorpId}#g" conf/alert.properties

+sed -i ${txt} "s#enterprise.wechat.secret.*#enterprise.wechat.secret=${enterpriseWechatSecret}#g" conf/alert.properties

+sed -i ${txt} "s#enterprise.wechat.agent.id.*#enterprise.wechat.agent.id=${enterpriseWechatAgentId}#g" conf/alert.properties

+sed -i ${txt} "s#enterprise.wechat.users.*#enterprise.wechat.users=${enterpriseWechatUsers}#g" conf/alert.properties

sed -i ${txt} "s#installPath.*#installPath=${installPath}#g" conf/config/install_config.conf

diff --git a/package.xml b/package.xml

index 4976f061fd..619dfb07cf 100644

--- a/package.xml

+++ b/package.xml

@@ -34,6 +34,14 @@

.

+

+ escheduler-ui/dist

+

+ **/*.*

+

+ ./ui

+

+

sql

diff --git a/pom.xml b/pom.xml

index e993636be4..a97650aafb 100644

--- a/pom.xml

+++ b/pom.xml

@@ -3,7 +3,7 @@

4.0.0

cn.analysys

escheduler

- 1.0.3-SNAPSHOT

+ 1.1.0-SNAPSHOT

pom

escheduler

http://maven.apache.org

diff --git a/sql/create/release-1.0.0_schema/mysql/escheduler_dml.sql b/sql/create/release-1.0.0_schema/mysql/escheduler_dml.sql

index b7f25d76e1..b075475270 100644

--- a/sql/create/release-1.0.0_schema/mysql/escheduler_dml.sql

+++ b/sql/create/release-1.0.0_schema/mysql/escheduler_dml.sql

@@ -1,5 +1,5 @@

-- Records of t_escheduler_user,user : admin , password : escheduler123

-INSERT INTO `t_escheduler_user` VALUES ('1', 'admin', '055a97b5fcd6d120372ad1976518f371', '0', '825193156@qq.com', '15001335629', '0', '2018-03-27 15:48:50', '2018-10-24 17:40:22');

+INSERT INTO `t_escheduler_user` VALUES ('1', 'admin', '055a97b5fcd6d120372ad1976518f371', '0', 'xxx@qq.com', 'xx', '0', '2018-03-27 15:48:50', '2018-10-24 17:40:22');

INSERT INTO `t_escheduler_alertgroup` VALUES (1, 'escheduler管理员告警组', '0', 'escheduler管理员告警组','2018-11-29 10:20:39', '2018-11-29 10:20:39');

INSERT INTO `t_escheduler_relation_user_alertgroup` VALUES ('1', '1', '1', '2018-11-29 10:22:33', '2018-11-29 10:22:33');

diff --git a/sql/escheduler.sql b/sql/escheduler.sql

deleted file mode 100644

index 774de10e42..0000000000

--- a/sql/escheduler.sql

+++ /dev/null

@@ -1,436 +0,0 @@

-/*

-Navicat MySQL Data Transfer

-

-Source Server : xx.xx

-Source Server Version : 50725

-Source Host : 192.168.xx.xx:3306

-Source Database : escheduler

-

-Target Server Type : MYSQL

-Target Server Version : 50725

-File Encoding : 65001

-

-Date: 2019-03-23 11:47:30

-*/

-

-SET FOREIGN_KEY_CHECKS=0;

-

--- ----------------------------

--- Table structure for t_escheduler_alert

--- ----------------------------

-DROP TABLE IF EXISTS `t_escheduler_alert`;

-CREATE TABLE `t_escheduler_alert` (

- `id` int(11) NOT NULL AUTO_INCREMENT COMMENT '主键',

- `title` varchar(64) DEFAULT NULL COMMENT '消息标题',

- `show_type` tinyint(4) DEFAULT NULL COMMENT '发送格式,0是TABLE,1是TEXT',

- `content` text COMMENT '消息内容(可以是邮件,可以是短信。邮件是JSON Map存放,短信是字符串)',

- `alert_type` tinyint(4) DEFAULT NULL COMMENT '0是邮件,1是短信',

- `alert_status` tinyint(4) DEFAULT '0' COMMENT '0是待执行,1是执行成功,2执行失败',

- `log` text COMMENT '执行日志',

- `alertgroup_id` int(11) DEFAULT NULL COMMENT '发送组',

- `receivers` text COMMENT '收件人',

- `receivers_cc` text COMMENT '抄送人',

- `create_time` datetime DEFAULT NULL COMMENT '创建时间',

- `update_time` datetime DEFAULT NULL COMMENT '更新时间',

- PRIMARY KEY (`id`)

-) ENGINE=InnoDB DEFAULT CHARSET=utf8;

-

--- ----------------------------

--- Table structure for t_escheduler_alertgroup

--- ----------------------------

-DROP TABLE IF EXISTS `t_escheduler_alertgroup`;

-CREATE TABLE `t_escheduler_alertgroup` (

- `id` int(11) NOT NULL AUTO_INCREMENT COMMENT '主键',

- `group_name` varchar(255) DEFAULT NULL COMMENT '组名称',

- `group_type` tinyint(4) DEFAULT NULL COMMENT '组类型(邮件0,短信1...)',

- `desc` varchar(255) DEFAULT NULL COMMENT '备注',

- `create_time` datetime DEFAULT NULL COMMENT '创建时间',

- `update_time` datetime DEFAULT NULL COMMENT '更新时间',

- PRIMARY KEY (`id`)

-) ENGINE=InnoDB DEFAULT CHARSET=utf8;

-

--- ----------------------------

--- Table structure for t_escheduler_command

--- ----------------------------

-DROP TABLE IF EXISTS `t_escheduler_command`;

-CREATE TABLE `t_escheduler_command` (

- `id` int(11) NOT NULL AUTO_INCREMENT COMMENT '主键',

- `command_type` tinyint(4) DEFAULT NULL COMMENT '命令类型:0 启动工作流,1 从当前节点开始执行,2 恢复被容错的工作流,3 恢复暂停流程,4 从失败节点开始执行,5 补数,6 调度,7 重跑,8 暂停,9 停止,10 恢复等待线程',

- `process_definition_id` int(11) DEFAULT NULL COMMENT '流程定义id',

- `command_param` text COMMENT '命令的参数(json格式)',

- `task_depend_type` tinyint(4) DEFAULT NULL COMMENT '节点依赖类型:0 当前节点,1 向前执行,2 向后执行',

- `failure_strategy` tinyint(4) DEFAULT '0' COMMENT '失败策略:0结束,1继续',

- `warning_type` tinyint(4) DEFAULT '0' COMMENT '告警类型:0 不发,1 流程成功发,2 流程失败发,3 成功失败都发',

- `warning_group_id` int(11) DEFAULT NULL COMMENT '告警组',

- `schedule_time` datetime DEFAULT NULL COMMENT '预期运行时间',

- `start_time` datetime DEFAULT NULL COMMENT '开始时间',

- `executor_id` int(11) DEFAULT NULL COMMENT '执行用户id',

- `dependence` varchar(255) DEFAULT NULL COMMENT '依赖字段',

- `update_time` datetime DEFAULT NULL COMMENT '更新时间',

- `process_instance_priority` int(11) DEFAULT NULL COMMENT '流程实例优先级:0 Highest,1 High,2 Medium,3 Low,4 Lowest',

- PRIMARY KEY (`id`)

-) ENGINE=InnoDB DEFAULT CHARSET=utf8;

-

--- ----------------------------

--- Table structure for t_escheduler_datasource

--- ----------------------------

-DROP TABLE IF EXISTS `t_escheduler_datasource`;

-CREATE TABLE `t_escheduler_datasource` (

- `id` int(11) NOT NULL AUTO_INCREMENT COMMENT '主键',

- `name` varchar(64) NOT NULL COMMENT '数据源名称',

- `note` varchar(256) DEFAULT NULL COMMENT '描述',

- `type` tinyint(4) NOT NULL COMMENT '数据源类型:0 mysql,1 postgresql,2 hive,3 spark',

- `user_id` int(11) NOT NULL COMMENT '创建用户id',

- `connection_params` text NOT NULL COMMENT '连接参数(json格式)',

- `create_time` datetime NOT NULL COMMENT '创建时间',

- `update_time` datetime DEFAULT NULL COMMENT '更新时间',

- PRIMARY KEY (`id`)

-) ENGINE=InnoDB DEFAULT CHARSET=utf8;

-

--- ----------------------------

--- Table structure for t_escheduler_master_server

--- ----------------------------

-DROP TABLE IF EXISTS `t_escheduler_master_server`;

-CREATE TABLE `t_escheduler_master_server` (

- `id` int(11) NOT NULL AUTO_INCREMENT COMMENT '主键',

- `host` varchar(45) DEFAULT NULL COMMENT 'ip',

- `port` int(11) DEFAULT NULL COMMENT '进程号',

- `zk_directory` varchar(64) DEFAULT NULL COMMENT 'zk注册目录',

- `res_info` varchar(256) DEFAULT NULL COMMENT '集群资源信息:json格式{"cpu":xxx,"memroy":xxx}',

- `create_time` datetime DEFAULT NULL COMMENT '创建时间',

- `last_heartbeat_time` datetime DEFAULT NULL COMMENT '最后心跳时间',

- PRIMARY KEY (`id`)

-) ENGINE=InnoDB DEFAULT CHARSET=utf8;

-

--- ----------------------------

--- Table structure for t_escheduler_process_definition

--- ----------------------------

-DROP TABLE IF EXISTS `t_escheduler_process_definition`;

-CREATE TABLE `t_escheduler_process_definition` (

- `id` int(11) NOT NULL AUTO_INCREMENT COMMENT '主键',

- `name` varchar(255) DEFAULT NULL COMMENT '流程定义名称',

- `version` int(11) DEFAULT NULL COMMENT '流程定义版本',

- `release_state` tinyint(4) DEFAULT NULL COMMENT '流程定义的发布状态:0 未上线 1已上线',

- `project_id` int(11) DEFAULT NULL COMMENT '项目id',

- `user_id` int(11) DEFAULT NULL COMMENT '流程定义所属用户id',

- `process_definition_json` longtext COMMENT '流程定义json串',

- `desc` text COMMENT '流程定义描述',

- `global_params` text COMMENT '全局参数',

- `flag` tinyint(4) DEFAULT NULL COMMENT '流程是否可用\r\n:0 不可用\r\n,1 可用',

- `locations` text COMMENT '节点坐标信息',

- `connects` text COMMENT '节点连线信息',

- `receivers` text COMMENT '收件人',

- `receivers_cc` text COMMENT '抄送人',

- `create_time` datetime DEFAULT NULL COMMENT '创建时间',

- `update_time` datetime DEFAULT NULL COMMENT '更新时间',

- PRIMARY KEY (`id`),

- KEY `process_definition_index` (`project_id`,`id`) USING BTREE

-) ENGINE=InnoDB DEFAULT CHARSET=utf8;

-

--- ----------------------------

--- Table structure for t_escheduler_process_instance

--- ----------------------------

-DROP TABLE IF EXISTS `t_escheduler_process_instance`;

-CREATE TABLE `t_escheduler_process_instance` (

- `id` int(11) NOT NULL AUTO_INCREMENT COMMENT '主键',

- `name` varchar(255) DEFAULT NULL COMMENT '流程实例名称',

- `process_definition_id` int(11) DEFAULT NULL COMMENT '流程定义id',

- `state` tinyint(4) DEFAULT NULL COMMENT '流程实例状态:0 提交成功,1 正在运行,2 准备暂停,3 暂停,4 准备停止,5 停止,6 失败,7 成功,8 需要容错,9 kill,10 等待线程,11 等待依赖完成',

- `recovery` tinyint(4) DEFAULT NULL COMMENT '流程实例容错标识:0 正常,1 需要被容错重启',

- `start_time` datetime DEFAULT NULL COMMENT '流程实例开始时间',

- `end_time` datetime DEFAULT NULL COMMENT '流程实例结束时间',

- `run_times` int(11) DEFAULT NULL COMMENT '流程实例运行次数',

- `host` varchar(45) DEFAULT NULL COMMENT '流程实例所在的机器',

- `command_type` tinyint(4) DEFAULT NULL COMMENT '命令类型:0 启动工作流,1 从当前节点开始执行,2 恢复被容错的工作流,3 恢复暂停流程,4 从失败节点开始执行,5 补数,6 调度,7 重跑,8 暂停,9 停止,10 恢复等待线程',

- `command_param` text COMMENT '命令的参数(json格式)',

- `task_depend_type` tinyint(4) DEFAULT NULL COMMENT '节点依赖类型:0 当前节点,1 向前执行,2 向后执行',

- `max_try_times` tinyint(4) DEFAULT '0' COMMENT '最大重试次数',

- `failure_strategy` tinyint(4) DEFAULT '0' COMMENT '失败策略 0 失败后结束,1 失败后继续',

- `warning_type` tinyint(4) DEFAULT '0' COMMENT '告警类型:0 不发,1 流程成功发,2 流程失败发,3 成功失败都发',

- `warning_group_id` int(11) DEFAULT NULL COMMENT '告警组id',

- `schedule_time` datetime DEFAULT NULL COMMENT '预期运行时间',

- `command_start_time` datetime DEFAULT NULL COMMENT '开始命令时间',

- `global_params` text COMMENT '全局参数(固化流程定义的参数)',

- `process_instance_json` longtext COMMENT '流程实例json(copy的流程定义的json)',

- `flag` tinyint(4) DEFAULT '1' COMMENT '是否可用,1 可用,0不可用',

- `update_time` timestamp NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP,

- `is_sub_process` int(11) DEFAULT '0' COMMENT '是否是子工作流 1 是,0 不是',

- `executor_id` int(11) NOT NULL COMMENT '命令执行者',

- `locations` text COMMENT '节点坐标信息',

- `connects` text COMMENT '节点连线信息',

- `history_cmd` text COMMENT '历史命令,记录所有对流程实例的操作',

- `dependence_schedule_times` text COMMENT '依赖节点的预估时间',

- `process_instance_priority` int(11) DEFAULT NULL COMMENT '流程实例优先级:0 Highest,1 High,2 Medium,3 Low,4 Lowest',

- PRIMARY KEY (`id`),

- KEY `process_instance_index` (`process_definition_id`,`id`) USING BTREE,

- KEY `start_time_index` (`start_time`) USING BTREE

-) ENGINE=InnoDB DEFAULT CHARSET=utf8;

-

--- ----------------------------

--- Table structure for t_escheduler_project

--- ----------------------------

-DROP TABLE IF EXISTS `t_escheduler_project`;

-CREATE TABLE `t_escheduler_project` (

- `id` int(11) NOT NULL AUTO_INCREMENT COMMENT '主键',

- `name` varchar(100) DEFAULT NULL COMMENT '项目名称',

- `desc` varchar(200) DEFAULT NULL COMMENT '项目描述',

- `user_id` int(11) DEFAULT NULL COMMENT '所属用户',

- `flag` tinyint(4) DEFAULT '1' COMMENT '是否可用 1 可用,0 不可用',

- `create_time` datetime DEFAULT CURRENT_TIMESTAMP COMMENT '创建时间',

- `update_time` datetime DEFAULT CURRENT_TIMESTAMP COMMENT '修改时间',

- PRIMARY KEY (`id`),

- KEY `user_id_index` (`user_id`) USING BTREE

-) ENGINE=InnoDB DEFAULT CHARSET=utf8;

-

--- ----------------------------

--- Table structure for t_escheduler_queue

--- ----------------------------

-DROP TABLE IF EXISTS `t_escheduler_queue`;

-CREATE TABLE `t_escheduler_queue` (

- `id` int(11) NOT NULL AUTO_INCREMENT COMMENT '主键',

- `queue_name` varchar(64) DEFAULT NULL COMMENT '队列名称',

- `queue` varchar(64) DEFAULT NULL COMMENT 'yarn队列名称',

- PRIMARY KEY (`id`)

-) ENGINE=InnoDB DEFAULT CHARSET=utf8;

-

--- ----------------------------

--- Table structure for t_escheduler_relation_datasource_user

--- ----------------------------

-DROP TABLE IF EXISTS `t_escheduler_relation_datasource_user`;

-CREATE TABLE `t_escheduler_relation_datasource_user` (

- `id` int(11) NOT NULL AUTO_INCREMENT COMMENT '主键',

- `user_id` int(11) NOT NULL COMMENT '用户id',

- `datasource_id` int(11) DEFAULT NULL COMMENT '数据源id',

- `perm` int(11) DEFAULT '1' COMMENT '权限',

- `create_time` datetime DEFAULT NULL COMMENT '创建时间',

- `update_time` datetime DEFAULT NULL COMMENT '更新时间',

- PRIMARY KEY (`id`)

-) ENGINE=InnoDB DEFAULT CHARSET=utf8;

-

--- ----------------------------

--- Table structure for t_escheduler_relation_process_instance

--- ----------------------------

-DROP TABLE IF EXISTS `t_escheduler_relation_process_instance`;

-CREATE TABLE `t_escheduler_relation_process_instance` (

- `id` int(11) NOT NULL AUTO_INCREMENT COMMENT '主键',

- `parent_process_instance_id` int(11) DEFAULT NULL COMMENT '父流程实例id',

- `parent_task_instance_id` int(11) DEFAULT NULL COMMENT '父任务实例id',

- `process_instance_id` int(11) DEFAULT NULL COMMENT '子流程实例id',

- PRIMARY KEY (`id`)

-) ENGINE=InnoDB DEFAULT CHARSET=utf8;

-

--- ----------------------------

--- Table structure for t_escheduler_relation_project_user

--- ----------------------------

-DROP TABLE IF EXISTS `t_escheduler_relation_project_user`;

-CREATE TABLE `t_escheduler_relation_project_user` (

- `id` int(11) NOT NULL AUTO_INCREMENT COMMENT '主键',

- `user_id` int(11) NOT NULL COMMENT '用户id',

- `project_id` int(11) DEFAULT NULL COMMENT '项目id',

- `perm` int(11) DEFAULT '1' COMMENT '权限',

- `create_time` datetime DEFAULT NULL COMMENT '创建时间',

- `update_time` datetime DEFAULT NULL COMMENT '更新时间',

- PRIMARY KEY (`id`),

- KEY `user_id_index` (`user_id`) USING BTREE

-) ENGINE=InnoDB DEFAULT CHARSET=utf8;

-

--- ----------------------------

--- Table structure for t_escheduler_relation_resources_user

--- ----------------------------

-DROP TABLE IF EXISTS `t_escheduler_relation_resources_user`;

-CREATE TABLE `t_escheduler_relation_resources_user` (

- `id` int(11) NOT NULL AUTO_INCREMENT,

- `user_id` int(11) NOT NULL COMMENT '用户id',

- `resources_id` int(11) DEFAULT NULL COMMENT '资源id',

- `perm` int(11) DEFAULT '1' COMMENT '权限',

- `create_time` datetime DEFAULT NULL COMMENT '创建时间',

- `update_time` datetime DEFAULT NULL COMMENT '更新时间',

- PRIMARY KEY (`id`)

-) ENGINE=InnoDB DEFAULT CHARSET=utf8;

-

--- ----------------------------

--- Table structure for t_escheduler_relation_udfs_user

--- ----------------------------

-DROP TABLE IF EXISTS `t_escheduler_relation_udfs_user`;

-CREATE TABLE `t_escheduler_relation_udfs_user` (

- `id` int(11) NOT NULL AUTO_INCREMENT COMMENT '主键',

- `user_id` int(11) NOT NULL COMMENT '用户id',

- `udf_id` int(11) DEFAULT NULL COMMENT 'udf id',

- `perm` int(11) DEFAULT '1' COMMENT '权限',

- `create_time` datetime DEFAULT NULL COMMENT '创建时间',

- `update_time` datetime DEFAULT NULL COMMENT '更新时间',

- PRIMARY KEY (`id`)

-) ENGINE=InnoDB DEFAULT CHARSET=utf8;

-

--- ----------------------------

--- Table structure for t_escheduler_relation_user_alertgroup

--- ----------------------------

-DROP TABLE IF EXISTS `t_escheduler_relation_user_alertgroup`;

-CREATE TABLE `t_escheduler_relation_user_alertgroup` (

- `id` int(11) NOT NULL AUTO_INCREMENT COMMENT '主键',

- `alertgroup_id` int(11) DEFAULT NULL COMMENT '组消息id',

- `user_id` int(11) DEFAULT NULL COMMENT '用户id',

- `create_time` datetime DEFAULT NULL COMMENT '创建时间',

- `update_time` datetime DEFAULT NULL COMMENT '更新时间',

- PRIMARY KEY (`id`)

-) ENGINE=InnoDB AUTO_INCREMENT=2 DEFAULT CHARSET=utf8;

-

--- ----------------------------

--- Table structure for t_escheduler_resources

--- ----------------------------

-DROP TABLE IF EXISTS `t_escheduler_resources`;

-CREATE TABLE `t_escheduler_resources` (

- `id` int(11) NOT NULL AUTO_INCREMENT COMMENT '主键',

- `alias` varchar(64) DEFAULT NULL COMMENT '别名',

- `file_name` varchar(64) DEFAULT NULL COMMENT '文件名',

- `desc` varchar(256) DEFAULT NULL COMMENT '描述',

- `user_id` int(11) DEFAULT NULL COMMENT '用户id',

- `type` tinyint(4) DEFAULT NULL COMMENT '资源类型,0 FILE,1 UDF',

- `size` bigint(20) DEFAULT NULL COMMENT '资源大小',

- `create_time` datetime DEFAULT NULL COMMENT '创建时间',

- `update_time` datetime DEFAULT NULL COMMENT '更新时间',

- PRIMARY KEY (`id`)

-) ENGINE=InnoDB DEFAULT CHARSET=utf8;

-

--- ----------------------------

--- Table structure for t_escheduler_schedules

--- ----------------------------

-DROP TABLE IF EXISTS `t_escheduler_schedules`;

-CREATE TABLE `t_escheduler_schedules` (

- `id` int(11) NOT NULL AUTO_INCREMENT COMMENT '主键',

- `process_definition_id` int(11) NOT NULL COMMENT '流程定义id',

- `start_time` datetime NOT NULL COMMENT '调度开始时间',

- `end_time` datetime NOT NULL COMMENT '调度结束时间',

- `crontab` varchar(256) NOT NULL COMMENT 'crontab 表达式',

- `failure_strategy` tinyint(4) NOT NULL COMMENT '失败策略: 0 结束,1 继续',

- `user_id` int(11) NOT NULL COMMENT '用户id',

- `release_state` tinyint(4) NOT NULL COMMENT '状态:0 未上线,1 上线',

- `warning_type` tinyint(4) NOT NULL COMMENT '告警类型:0 不发,1 流程成功发,2 流程失败发,3 成功失败都发',

- `warning_group_id` int(11) DEFAULT NULL COMMENT '告警组id',

- `process_instance_priority` int(11) DEFAULT NULL COMMENT '流程实例优先级:0 Highest,1 High,2 Medium,3 Low,4 Lowest',

- `create_time` datetime NOT NULL COMMENT '创建时间',

- `update_time` datetime NOT NULL COMMENT '更新时间',

- PRIMARY KEY (`id`)

-) ENGINE=InnoDB DEFAULT CHARSET=utf8;

-

--- ----------------------------

--- Table structure for t_escheduler_session

--- ----------------------------

-DROP TABLE IF EXISTS `t_escheduler_session`;

-CREATE TABLE `t_escheduler_session` (

- `id` varchar(64) NOT NULL COMMENT '主键',

- `user_id` int(11) DEFAULT NULL COMMENT '用户id',

- `ip` varchar(45) DEFAULT NULL COMMENT '登录ip',

- `last_login_time` datetime DEFAULT NULL COMMENT '最后登录时间',

- PRIMARY KEY (`id`)

-) ENGINE=InnoDB DEFAULT CHARSET=utf8;

-

--- ----------------------------

--- Table structure for t_escheduler_task_instance

--- ----------------------------

-DROP TABLE IF EXISTS `t_escheduler_task_instance`;

-CREATE TABLE `t_escheduler_task_instance` (

- `id` int(11) NOT NULL AUTO_INCREMENT COMMENT '主键',

- `name` varchar(255) DEFAULT NULL COMMENT '任务名称',

- `task_type` varchar(64) DEFAULT NULL COMMENT '任务类型',

- `process_definition_id` int(11) DEFAULT NULL COMMENT '流程定义id',

- `process_instance_id` int(11) DEFAULT NULL COMMENT '流程实例id',

- `task_json` longtext COMMENT '任务节点json',

- `state` tinyint(4) DEFAULT NULL COMMENT '任务实例状态:0 提交成功,1 正在运行,2 准备暂停,3 暂停,4 准备停止,5 停止,6 失败,7 成功,8 需要容错,9 kill,10 等待线程,11 等待依赖完成',

- `submit_time` datetime DEFAULT NULL COMMENT '任务提交时间',

- `start_time` datetime DEFAULT NULL COMMENT '任务开始时间',

- `end_time` datetime DEFAULT NULL COMMENT '任务结束时间',

- `host` varchar(45) DEFAULT NULL COMMENT '执行任务的机器',

- `execute_path` varchar(200) DEFAULT NULL COMMENT '任务执行路径',

- `log_path` varchar(200) DEFAULT NULL COMMENT '任务日志路径',

- `alert_flag` tinyint(4) DEFAULT NULL COMMENT '是否告警',

- `retry_times` int(4) DEFAULT '0' COMMENT '重试次数',

- `pid` int(4) DEFAULT NULL COMMENT '进程pid',

- `app_link` varchar(255) DEFAULT NULL COMMENT 'yarn app id',

- `flag` tinyint(4) DEFAULT '1' COMMENT '是否可用:0 不可用,1 可用',

- `retry_interval` int(4) DEFAULT NULL COMMENT '重试间隔',

- `max_retry_times` int(2) DEFAULT NULL COMMENT '最大重试次数',

- `task_instance_priority` int(11) DEFAULT NULL COMMENT '任务实例优先级:0 Highest,1 High,2 Medium,3 Low,4 Lowest',

- PRIMARY KEY (`id`),

- KEY `process_instance_id` (`process_instance_id`) USING BTREE,

- KEY `task_instance_index` (`process_definition_id`,`process_instance_id`) USING BTREE,

- CONSTRAINT `foreign_key_instance_id` FOREIGN KEY (`process_instance_id`) REFERENCES `t_escheduler_process_instance` (`id`) ON DELETE CASCADE

-) ENGINE=InnoDB DEFAULT CHARSET=utf8;

-

--- ----------------------------

--- Table structure for t_escheduler_tenant

--- ----------------------------

-DROP TABLE IF EXISTS `t_escheduler_tenant`;

-CREATE TABLE `t_escheduler_tenant` (

- `id` int(11) NOT NULL AUTO_INCREMENT COMMENT '主键',

- `tenant_code` varchar(64) DEFAULT NULL COMMENT '租户编码',

- `tenant_name` varchar(64) DEFAULT NULL COMMENT '租户名称',

- `desc` varchar(256) DEFAULT NULL COMMENT '描述',

- `queue_id` int(11) DEFAULT NULL COMMENT '队列id',

- `create_time` datetime DEFAULT NULL COMMENT '创建时间',

- `update_time` datetime DEFAULT NULL COMMENT '更新时间',

- PRIMARY KEY (`id`)

-) ENGINE=InnoDB DEFAULT CHARSET=utf8;

-

--- ----------------------------

--- Table structure for t_escheduler_udfs

--- ----------------------------

-DROP TABLE IF EXISTS `t_escheduler_udfs`;

-CREATE TABLE `t_escheduler_udfs` (

- `id` int(11) NOT NULL AUTO_INCREMENT COMMENT '主键',

- `user_id` int(11) NOT NULL COMMENT '用户id',

- `func_name` varchar(100) NOT NULL COMMENT 'UDF函数名',

- `class_name` varchar(255) NOT NULL COMMENT '类名',

- `type` tinyint(4) NOT NULL COMMENT 'Udf函数类型',

- `arg_types` varchar(255) DEFAULT NULL COMMENT '参数',

- `database` varchar(255) DEFAULT NULL COMMENT '库名',

- `desc` varchar(255) DEFAULT NULL COMMENT '描述',

- `resource_id` int(11) NOT NULL COMMENT '资源id',

- `resource_name` varchar(255) NOT NULL COMMENT '资源名称',

- `create_time` datetime NOT NULL COMMENT '创建时间',

- `update_time` datetime NOT NULL COMMENT '更新时间',

- PRIMARY KEY (`id`)

-) ENGINE=InnoDB DEFAULT CHARSET=utf8;

-

--- ----------------------------

--- Table structure for t_escheduler_user

--- ----------------------------

-DROP TABLE IF EXISTS `t_escheduler_user`;

-CREATE TABLE `t_escheduler_user` (

- `id` int(11) NOT NULL AUTO_INCREMENT COMMENT '用户id',

- `user_name` varchar(64) DEFAULT NULL COMMENT '用户名',

- `user_password` varchar(64) DEFAULT NULL COMMENT '用户密码',

- `user_type` tinyint(4) DEFAULT NULL COMMENT '用户类型:0 管理员,1 普通用户',

- `email` varchar(64) DEFAULT NULL COMMENT '邮箱',

- `phone` varchar(11) DEFAULT NULL COMMENT '手机',

- `tenant_id` int(11) DEFAULT NULL COMMENT '管理员0,普通用户所属租户id',

- `create_time` datetime DEFAULT NULL COMMENT '创建时间',

- `update_time` datetime DEFAULT NULL COMMENT '更新时间',

- PRIMARY KEY (`id`),

- UNIQUE KEY `user_name_unique` (`user_name`)

-) ENGINE=InnoDB DEFAULT CHARSET=utf8;

-

--- ----------------------------

--- Table structure for t_escheduler_worker_server

--- ----------------------------

-DROP TABLE IF EXISTS `t_escheduler_worker_server`;

-CREATE TABLE `t_escheduler_worker_server` (

- `id` int(11) NOT NULL AUTO_INCREMENT COMMENT '主键',

- `host` varchar(45) DEFAULT NULL COMMENT 'ip',

- `port` int(11) DEFAULT NULL COMMENT '进程号',

- `zk_directory` varchar(64) CHARACTER SET utf8 COLLATE utf8_bin DEFAULT NULL COMMENT 'zk注册目录',

- `res_info` varchar(255) DEFAULT NULL COMMENT '集群资源信息:json格式{"cpu":xxx,"memroy":xxx}',

- `create_time` datetime DEFAULT NULL COMMENT '创建时间',

- `last_heartbeat_time` datetime DEFAULT NULL COMMENT '更新时间',

- PRIMARY KEY (`id`)

-) ENGINE=InnoDB DEFAULT CHARSET=utf8;

-

--- Records of t_escheduler_user,user : admin , password : escheduler123

-INSERT INTO `t_escheduler_user` VALUES ('1', 'admin', '055a97b5fcd6d120372ad1976518f371', '0', 'xxx@qq.com', 'xxxx', '0', '2018-03-27 15:48:50', '2018-10-24 17:40:22');

-INSERT INTO `t_escheduler_alertgroup` VALUES (1, 'escheduler管理员告警组', '0', 'escheduler管理员告警组','2018-11-29 10:20:39', '2018-11-29 10:20:39');

-INSERT INTO `t_escheduler_relation_user_alertgroup` VALUES ('1', '1', '1', '2018-11-29 10:22:33', '2018-11-29 10:22:33');

-

--- Records of t_escheduler_queue,default queue name : default

-INSERT INTO `t_escheduler_queue` VALUES ('1', 'default', 'default');

-

-

diff --git a/sql/quartz.sql b/sql/quartz.sql

deleted file mode 100644

index 22754b39dc..0000000000

--- a/sql/quartz.sql

+++ /dev/null

@@ -1,179 +0,0 @@

- #

- # In your Quartz properties file, you'll need to set

- # org.quartz.jobStore.driverDelegateClass = org.quartz.impl.jdbcjobstore.StdJDBCDelegate

- #

- #

- # By: Ron Cordell - roncordell

- # I didn't see this anywhere, so I thought I'd post it here. This is the script from Quartz to create the tables in a MySQL database, modified to use INNODB instead of MYISAM.

-

- DROP TABLE IF EXISTS QRTZ_FIRED_TRIGGERS;

- DROP TABLE IF EXISTS QRTZ_PAUSED_TRIGGER_GRPS;

- DROP TABLE IF EXISTS QRTZ_SCHEDULER_STATE;

- DROP TABLE IF EXISTS QRTZ_LOCKS;

- DROP TABLE IF EXISTS QRTZ_SIMPLE_TRIGGERS;

- DROP TABLE IF EXISTS QRTZ_SIMPROP_TRIGGERS;

- DROP TABLE IF EXISTS QRTZ_CRON_TRIGGERS;

- DROP TABLE IF EXISTS QRTZ_BLOB_TRIGGERS;

- DROP TABLE IF EXISTS QRTZ_TRIGGERS;

- DROP TABLE IF EXISTS QRTZ_JOB_DETAILS;

- DROP TABLE IF EXISTS QRTZ_CALENDARS;

-

- CREATE TABLE QRTZ_JOB_DETAILS(

- SCHED_NAME VARCHAR(120) NOT NULL,

- JOB_NAME VARCHAR(200) NOT NULL,

- JOB_GROUP VARCHAR(200) NOT NULL,

- DESCRIPTION VARCHAR(250) NULL,

- JOB_CLASS_NAME VARCHAR(250) NOT NULL,

- IS_DURABLE VARCHAR(1) NOT NULL,

- IS_NONCONCURRENT VARCHAR(1) NOT NULL,

- IS_UPDATE_DATA VARCHAR(1) NOT NULL,

- REQUESTS_RECOVERY VARCHAR(1) NOT NULL,

- JOB_DATA BLOB NULL,

- PRIMARY KEY (SCHED_NAME,JOB_NAME,JOB_GROUP))

- ENGINE=InnoDB;

-

- CREATE TABLE QRTZ_TRIGGERS (

- SCHED_NAME VARCHAR(120) NOT NULL,

- TRIGGER_NAME VARCHAR(200) NOT NULL,

- TRIGGER_GROUP VARCHAR(200) NOT NULL,

- JOB_NAME VARCHAR(200) NOT NULL,

- JOB_GROUP VARCHAR(200) NOT NULL,

- DESCRIPTION VARCHAR(250) NULL,

- NEXT_FIRE_TIME BIGINT(13) NULL,

- PREV_FIRE_TIME BIGINT(13) NULL,

- PRIORITY INTEGER NULL,

- TRIGGER_STATE VARCHAR(16) NOT NULL,

- TRIGGER_TYPE VARCHAR(8) NOT NULL,

- START_TIME BIGINT(13) NOT NULL,

- END_TIME BIGINT(13) NULL,

- CALENDAR_NAME VARCHAR(200) NULL,

- MISFIRE_INSTR SMALLINT(2) NULL,

- JOB_DATA BLOB NULL,

- PRIMARY KEY (SCHED_NAME,TRIGGER_NAME,TRIGGER_GROUP),

- FOREIGN KEY (SCHED_NAME,JOB_NAME,JOB_GROUP)

- REFERENCES QRTZ_JOB_DETAILS(SCHED_NAME,JOB_NAME,JOB_GROUP))

- ENGINE=InnoDB;

-

- CREATE TABLE QRTZ_SIMPLE_TRIGGERS (

- SCHED_NAME VARCHAR(120) NOT NULL,

- TRIGGER_NAME VARCHAR(200) NOT NULL,

- TRIGGER_GROUP VARCHAR(200) NOT NULL,

- REPEAT_COUNT BIGINT(7) NOT NULL,

- REPEAT_INTERVAL BIGINT(12) NOT NULL,

- TIMES_TRIGGERED BIGINT(10) NOT NULL,

- PRIMARY KEY (SCHED_NAME,TRIGGER_NAME,TRIGGER_GROUP),

- FOREIGN KEY (SCHED_NAME,TRIGGER_NAME,TRIGGER_GROUP)

- REFERENCES QRTZ_TRIGGERS(SCHED_NAME,TRIGGER_NAME,TRIGGER_GROUP))

- ENGINE=InnoDB;

-

- CREATE TABLE QRTZ_CRON_TRIGGERS (

- SCHED_NAME VARCHAR(120) NOT NULL,

- TRIGGER_NAME VARCHAR(200) NOT NULL,

- TRIGGER_GROUP VARCHAR(200) NOT NULL,

- CRON_EXPRESSION VARCHAR(120) NOT NULL,

- TIME_ZONE_ID VARCHAR(80),

- PRIMARY KEY (SCHED_NAME,TRIGGER_NAME,TRIGGER_GROUP),

- FOREIGN KEY (SCHED_NAME,TRIGGER_NAME,TRIGGER_GROUP)

- REFERENCES QRTZ_TRIGGERS(SCHED_NAME,TRIGGER_NAME,TRIGGER_GROUP))

- ENGINE=InnoDB;

-

- CREATE TABLE QRTZ_SIMPROP_TRIGGERS

- (

- SCHED_NAME VARCHAR(120) NOT NULL,

- TRIGGER_NAME VARCHAR(200) NOT NULL,

- TRIGGER_GROUP VARCHAR(200) NOT NULL,

- STR_PROP_1 VARCHAR(512) NULL,

- STR_PROP_2 VARCHAR(512) NULL,

- STR_PROP_3 VARCHAR(512) NULL,

- INT_PROP_1 INT NULL,

- INT_PROP_2 INT NULL,

- LONG_PROP_1 BIGINT NULL,

- LONG_PROP_2 BIGINT NULL,

- DEC_PROP_1 NUMERIC(13,4) NULL,

- DEC_PROP_2 NUMERIC(13,4) NULL,

- BOOL_PROP_1 VARCHAR(1) NULL,

- BOOL_PROP_2 VARCHAR(1) NULL,

- PRIMARY KEY (SCHED_NAME,TRIGGER_NAME,TRIGGER_GROUP),

- FOREIGN KEY (SCHED_NAME,TRIGGER_NAME,TRIGGER_GROUP)

- REFERENCES QRTZ_TRIGGERS(SCHED_NAME,TRIGGER_NAME,TRIGGER_GROUP))

- ENGINE=InnoDB;

-

- CREATE TABLE QRTZ_BLOB_TRIGGERS (

- SCHED_NAME VARCHAR(120) NOT NULL,

- TRIGGER_NAME VARCHAR(200) NOT NULL,

- TRIGGER_GROUP VARCHAR(200) NOT NULL,

- BLOB_DATA BLOB NULL,

- PRIMARY KEY (SCHED_NAME,TRIGGER_NAME,TRIGGER_GROUP),

- INDEX (SCHED_NAME,TRIGGER_NAME, TRIGGER_GROUP),

- FOREIGN KEY (SCHED_NAME,TRIGGER_NAME,TRIGGER_GROUP)

- REFERENCES QRTZ_TRIGGERS(SCHED_NAME,TRIGGER_NAME,TRIGGER_GROUP))

- ENGINE=InnoDB;

-

- CREATE TABLE QRTZ_CALENDARS (

- SCHED_NAME VARCHAR(120) NOT NULL,

- CALENDAR_NAME VARCHAR(200) NOT NULL,

- CALENDAR BLOB NOT NULL,

- PRIMARY KEY (SCHED_NAME,CALENDAR_NAME))

- ENGINE=InnoDB;

-

- CREATE TABLE QRTZ_PAUSED_TRIGGER_GRPS (

- SCHED_NAME VARCHAR(120) NOT NULL,

- TRIGGER_GROUP VARCHAR(200) NOT NULL,

- PRIMARY KEY (SCHED_NAME,TRIGGER_GROUP))

- ENGINE=InnoDB;

-

- CREATE TABLE QRTZ_FIRED_TRIGGERS (

- SCHED_NAME VARCHAR(120) NOT NULL,

- ENTRY_ID VARCHAR(95) NOT NULL,

- TRIGGER_NAME VARCHAR(200) NOT NULL,

- TRIGGER_GROUP VARCHAR(200) NOT NULL,

- INSTANCE_NAME VARCHAR(200) NOT NULL,

- FIRED_TIME BIGINT(13) NOT NULL,

- SCHED_TIME BIGINT(13) NOT NULL,

- PRIORITY INTEGER NOT NULL,

- STATE VARCHAR(16) NOT NULL,

- JOB_NAME VARCHAR(200) NULL,

- JOB_GROUP VARCHAR(200) NULL,

- IS_NONCONCURRENT VARCHAR(1) NULL,

- REQUESTS_RECOVERY VARCHAR(1) NULL,

- PRIMARY KEY (SCHED_NAME,ENTRY_ID))

- ENGINE=InnoDB;

-

- CREATE TABLE QRTZ_SCHEDULER_STATE (

- SCHED_NAME VARCHAR(120) NOT NULL,

- INSTANCE_NAME VARCHAR(200) NOT NULL,

- LAST_CHECKIN_TIME BIGINT(13) NOT NULL,

- CHECKIN_INTERVAL BIGINT(13) NOT NULL,

- PRIMARY KEY (SCHED_NAME,INSTANCE_NAME))

- ENGINE=InnoDB;

-

- CREATE TABLE QRTZ_LOCKS (

- SCHED_NAME VARCHAR(120) NOT NULL,

- LOCK_NAME VARCHAR(40) NOT NULL,

- PRIMARY KEY (SCHED_NAME,LOCK_NAME))

- ENGINE=InnoDB;

-

- CREATE INDEX IDX_QRTZ_J_REQ_RECOVERY ON QRTZ_JOB_DETAILS(SCHED_NAME,REQUESTS_RECOVERY);

- CREATE INDEX IDX_QRTZ_J_GRP ON QRTZ_JOB_DETAILS(SCHED_NAME,JOB_GROUP);

-

- CREATE INDEX IDX_QRTZ_T_J ON QRTZ_TRIGGERS(SCHED_NAME,JOB_NAME,JOB_GROUP);

- CREATE INDEX IDX_QRTZ_T_JG ON QRTZ_TRIGGERS(SCHED_NAME,JOB_GROUP);

- CREATE INDEX IDX_QRTZ_T_C ON QRTZ_TRIGGERS(SCHED_NAME,CALENDAR_NAME);

- CREATE INDEX IDX_QRTZ_T_G ON QRTZ_TRIGGERS(SCHED_NAME,TRIGGER_GROUP);

- CREATE INDEX IDX_QRTZ_T_STATE ON QRTZ_TRIGGERS(SCHED_NAME,TRIGGER_STATE);

- CREATE INDEX IDX_QRTZ_T_N_STATE ON QRTZ_TRIGGERS(SCHED_NAME,TRIGGER_NAME,TRIGGER_GROUP,TRIGGER_STATE);

- CREATE INDEX IDX_QRTZ_T_N_G_STATE ON QRTZ_TRIGGERS(SCHED_NAME,TRIGGER_GROUP,TRIGGER_STATE);

- CREATE INDEX IDX_QRTZ_T_NEXT_FIRE_TIME ON QRTZ_TRIGGERS(SCHED_NAME,NEXT_FIRE_TIME);

- CREATE INDEX IDX_QRTZ_T_NFT_ST ON QRTZ_TRIGGERS(SCHED_NAME,TRIGGER_STATE,NEXT_FIRE_TIME);

- CREATE INDEX IDX_QRTZ_T_NFT_MISFIRE ON QRTZ_TRIGGERS(SCHED_NAME,MISFIRE_INSTR,NEXT_FIRE_TIME);

- CREATE INDEX IDX_QRTZ_T_NFT_ST_MISFIRE ON QRTZ_TRIGGERS(SCHED_NAME,MISFIRE_INSTR,NEXT_FIRE_TIME,TRIGGER_STATE);

- CREATE INDEX IDX_QRTZ_T_NFT_ST_MISFIRE_GRP ON QRTZ_TRIGGERS(SCHED_NAME,MISFIRE_INSTR,NEXT_FIRE_TIME,TRIGGER_GROUP,TRIGGER_STATE);

-

- CREATE INDEX IDX_QRTZ_FT_TRIG_INST_NAME ON QRTZ_FIRED_TRIGGERS(SCHED_NAME,INSTANCE_NAME);

- CREATE INDEX IDX_QRTZ_FT_INST_JOB_REQ_RCVRY ON QRTZ_FIRED_TRIGGERS(SCHED_NAME,INSTANCE_NAME,REQUESTS_RECOVERY);

- CREATE INDEX IDX_QRTZ_FT_J_G ON QRTZ_FIRED_TRIGGERS(SCHED_NAME,JOB_NAME,JOB_GROUP);

- CREATE INDEX IDX_QRTZ_FT_JG ON QRTZ_FIRED_TRIGGERS(SCHED_NAME,JOB_GROUP);

- CREATE INDEX IDX_QRTZ_FT_T_G ON QRTZ_FIRED_TRIGGERS(SCHED_NAME,TRIGGER_NAME,TRIGGER_GROUP);

- CREATE INDEX IDX_QRTZ_FT_TG ON QRTZ_FIRED_TRIGGERS(SCHED_NAME,TRIGGER_GROUP);

-

- commit;

\ No newline at end of file

diff --git a/sql/soft_version b/sql/soft_version

index e6d5cb833c..1cc5f657e0 100644

--- a/sql/soft_version

+++ b/sql/soft_version

@@ -1 +1 @@

-1.0.2

\ No newline at end of file

+1.1.0

\ No newline at end of file

diff --git a/sql/upgrade/1.1.0_schema/mysql/escheduler_ddl.sql b/sql/upgrade/1.1.0_schema/mysql/escheduler_ddl.sql

new file mode 100644

index 0000000000..c43b3d86b0

--- /dev/null

+++ b/sql/upgrade/1.1.0_schema/mysql/escheduler_ddl.sql

@@ -0,0 +1,41 @@

+SET sql_mode=(SELECT REPLACE(@@sql_mode,'ONLY_FULL_GROUP_BY',''));

+

+-- ac_escheduler_T_t_escheduler_process_definition_C_tenant_id

+drop PROCEDURE if EXISTS ac_escheduler_T_t_escheduler_process_definition_C_tenant_id;

+delimiter d//

+CREATE PROCEDURE ac_escheduler_T_t_escheduler_process_definition_C_tenant_id()

+ BEGIN

+ IF NOT EXISTS (SELECT 1 FROM information_schema.COLUMNS

+ WHERE TABLE_NAME='t_escheduler_process_definition'

+ AND TABLE_SCHEMA=(SELECT DATABASE())

+ AND COLUMN_NAME='tenant_id')

+ THEN

+ ALTER TABLE `t_escheduler_process_definition` ADD COLUMN `tenant_id` int(11) NOT NULL DEFAULT -1 COMMENT '租户id' AFTER `timeout`;

+ END IF;

+ END;

+

+d//

+

+delimiter ;

+CALL ac_escheduler_T_t_escheduler_process_definition_C_tenant_id;

+DROP PROCEDURE ac_escheduler_T_t_escheduler_process_definition_C_tenant_id;

+

+-- ac_escheduler_T_t_escheduler_process_instance_C_tenant_id

+drop PROCEDURE if EXISTS ac_escheduler_T_t_escheduler_process_instance_C_tenant_id;

+delimiter d//

+CREATE PROCEDURE ac_escheduler_T_t_escheduler_process_instance_C_tenant_id()

+ BEGIN

+ IF NOT EXISTS (SELECT 1 FROM information_schema.COLUMNS

+ WHERE TABLE_NAME='t_escheduler_process_instance'

+ AND TABLE_SCHEMA=(SELECT DATABASE())

+ AND COLUMN_NAME='tenant_id')

+ THEN

+ ALTER TABLE `t_escheduler_process_instance` ADD COLUMN `tenant_id` int(11) NOT NULL DEFAULT -1 COMMENT '租户id' AFTER `timeout`;

+ END IF;

+ END;

+

+d//

+

+delimiter ;

+CALL ac_escheduler_T_t_escheduler_process_instance_C_tenant_id;

+DROP PROCEDURE ac_escheduler_T_t_escheduler_process_instance_C_tenant_id;

diff --git a/sql/upgrade/1.1.0_schema/mysql/escheduler_dml.sql b/sql/upgrade/1.1.0_schema/mysql/escheduler_dml.sql

new file mode 100644

index 0000000000..e69de29bb2

diff --git a/docs/zh_CN/系统使用手册.md b/docs/zh_CN/系统使用手册.md

index d29cde0050..b4d6d6bd9b 100644

--- a/docs/zh_CN/系统使用手册.md

+++ b/docs/zh_CN/系统使用手册.md

@@ -60,7 +60,7 @@

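### Run a process definition

- **A process definition that is offline can be edited but cannot be run**, so bring the workflow online first

> Click "Workflow Definition" to return to the process definition list, then click the "Online" icon to bring the workflow definition online.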

-

+

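> Before taking a workflow "Offline", first take its schedules in timing management offline; only then can the workflow definition be taken offline

- Click "Run" to execute the workflow. Run parameters: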

@@ -98,28 +98,28 @@

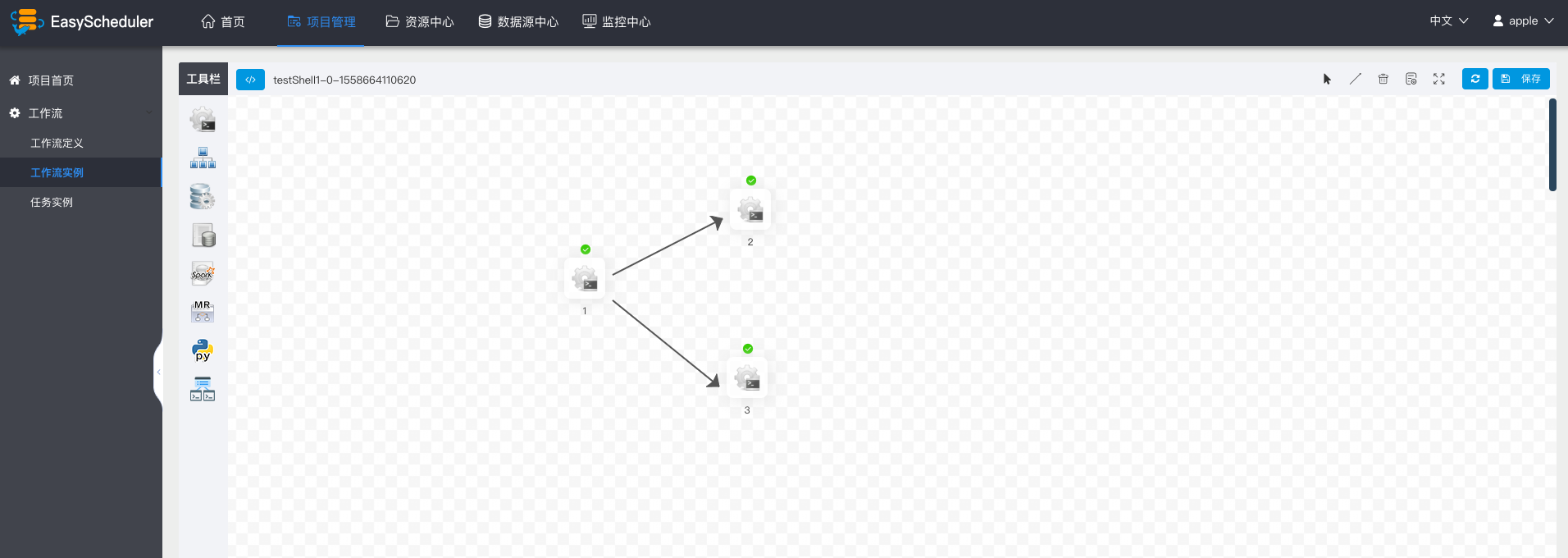

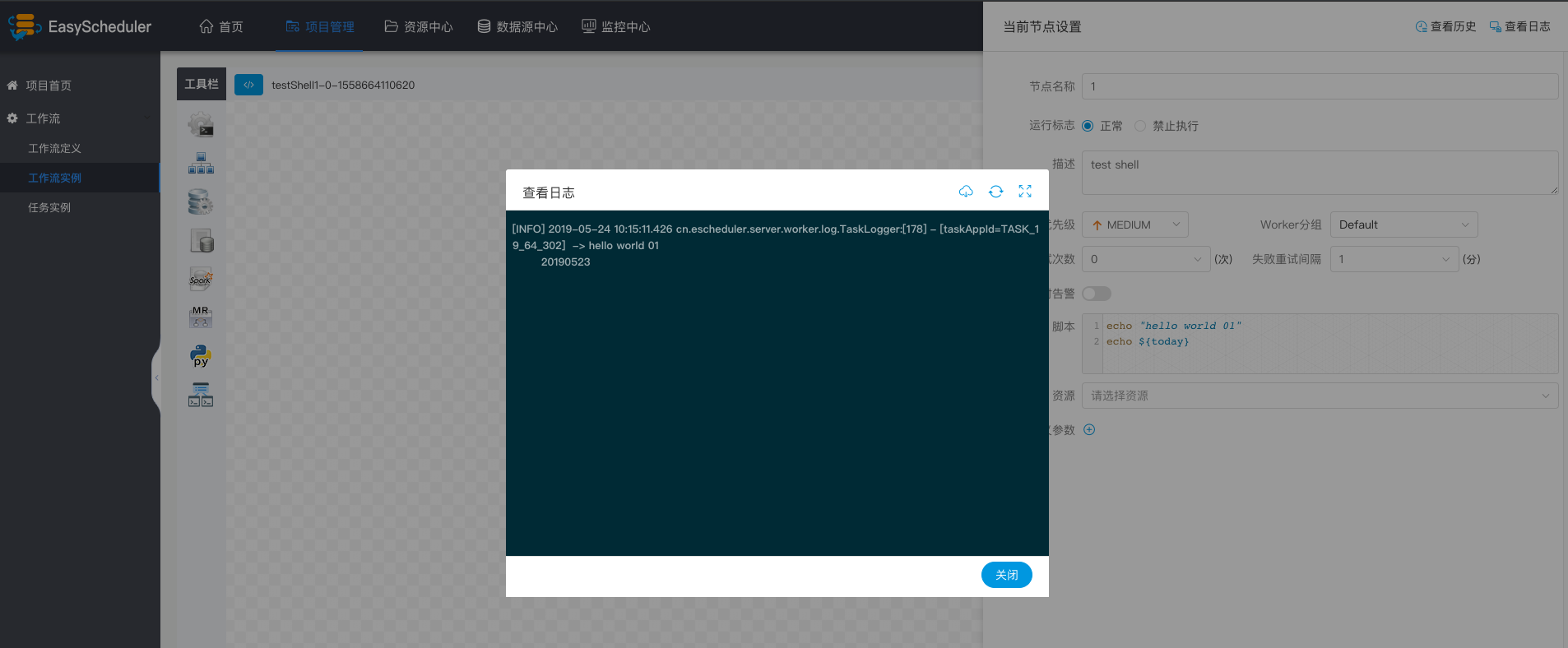

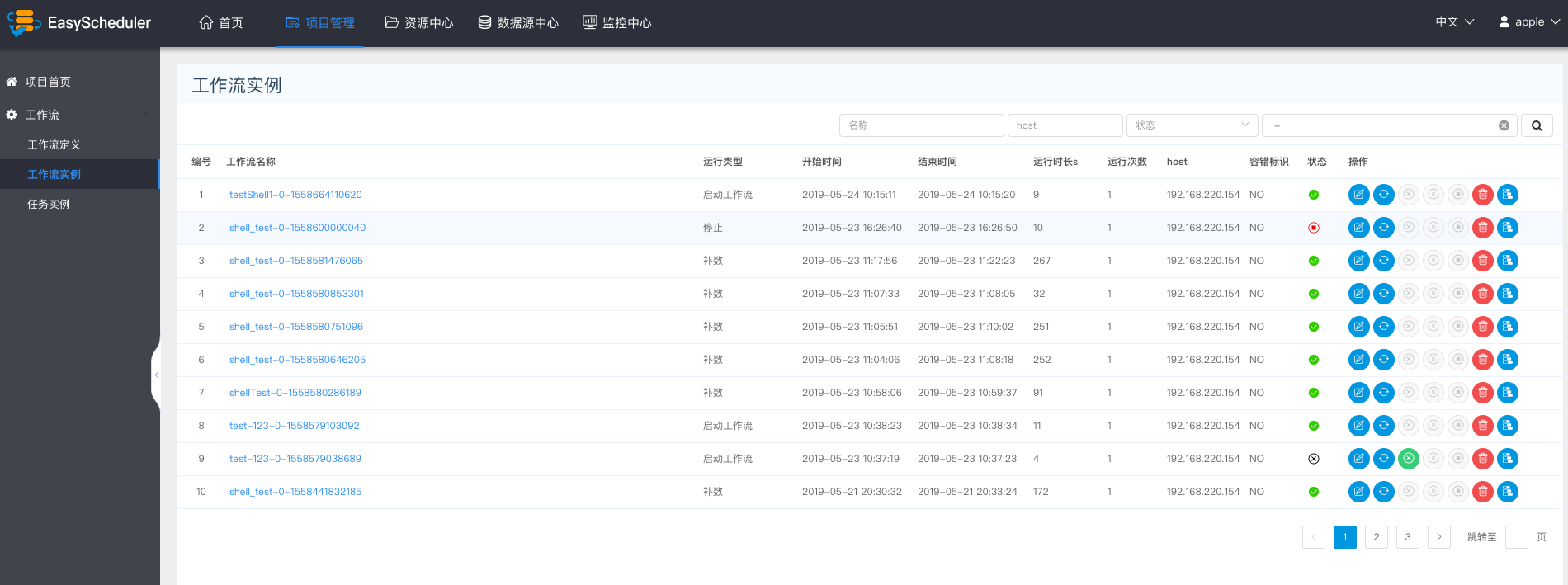

### View process instances

> Click "Workflow Instances" to view the list of process instances.

-

+

> Click the workflow name to view the task execution status.

-

+

@@ -287,7 +303,7 @@ conf/common/hadoop.properties

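#### Resource management

> Resource management works much like file management. The difference is that resource management holds uploaded UDF functions, while file management holds uploaded user programs, scripts, and configuration files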

-

+

* Upload UDF resources

> Same as uploading a file.

@@ -303,7 +319,7 @@ conf/common/hadoop.properties

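- Parameters: describes the function's input parameters

- Database name: reserved field, used for creating permanent UDF functions

- UDF resource: sets the resource file that corresponds to the UDF being created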

-

+
